- Getting Started With CWV

- Largest Contentful Paint (LCP)

- Interaction to Next Paint (INP)

- Cumulative Layout Shift (CLS)

- Web Performance Budgets

- Diagnose Time to First Byte

- Optimizing Images

- Optimizing JavaScript

- Third-Party Web Performance

- Critical CSS for Faster Rendering

- Optimizing Single Page Apps

- Caching for Faster Load Times

Continuous Web Performance Monitoring

The hardest part of web performance isn’t making your site faster. It’s keeping it fast. It's all too common to hear about a company devoting significant efforts to optimizing their site, only to find their page speed right back where it started a few months later. Continuous testing – using web performance budgets to create guardrails – is the solution.

Image: Freepik

The reality is that, as critical as site speed is, it’s also very easy to overlook. It doesn’t jump out like a blurry image or a layout issue. And the majority of modern tools and frameworks that are used to build sites today make it all too easy to compound the issue.

Making performance more visible throughout the development process is one of the most critical things a company can do. We need tools to help protect us from shipping code that will result in unexpected regressions.

Regression monitoring

The default stance of most tooling today is to make it easier to add code to your site or application at a moment’s notice. (Hey there, npm install.) What they don’t prioritize nearly as much is making you aware that you’re doing something that’s going to negatively impact your performance.

To combat that, we need to find ways to put monitoring into our workflows to help us notice when we're at risk of causing a performance regression. Luckily we have a few tools at our disposal.

Performance budgets

While a performance budget alone doesn’t ensure good performance, we have yet to see a company sustain performance over the long haul without using some sort of performance budget (whether they use that term or not).

A performance budget is a clearly defined limit on one or more performance metrics that the team agrees not to exceed. That budget is then used to alert on potential regressions, break deployments when a regression is detected, and guide design and development decisions.

Most web performance tools, like SpeedCurve, come with some default performance budgets, but you’ll want to reassess for yourself, probably on a 2-4 week basis, to ensure the budgets you set align with your reality. We’re looking for a balance of "alerts if there's a regression" and "doesn’t alert so much that we start ignoring the alerts".

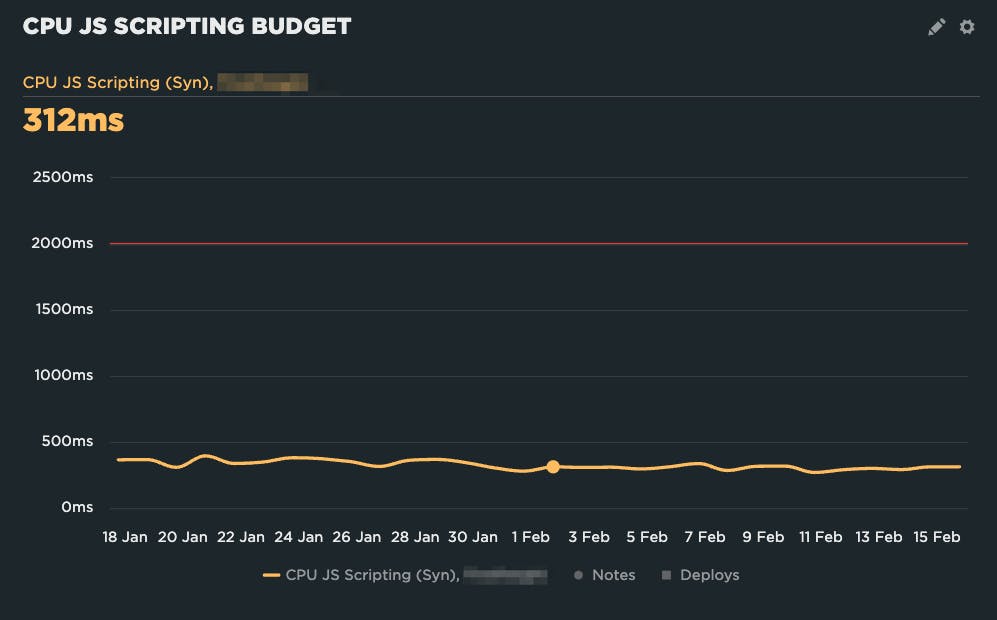

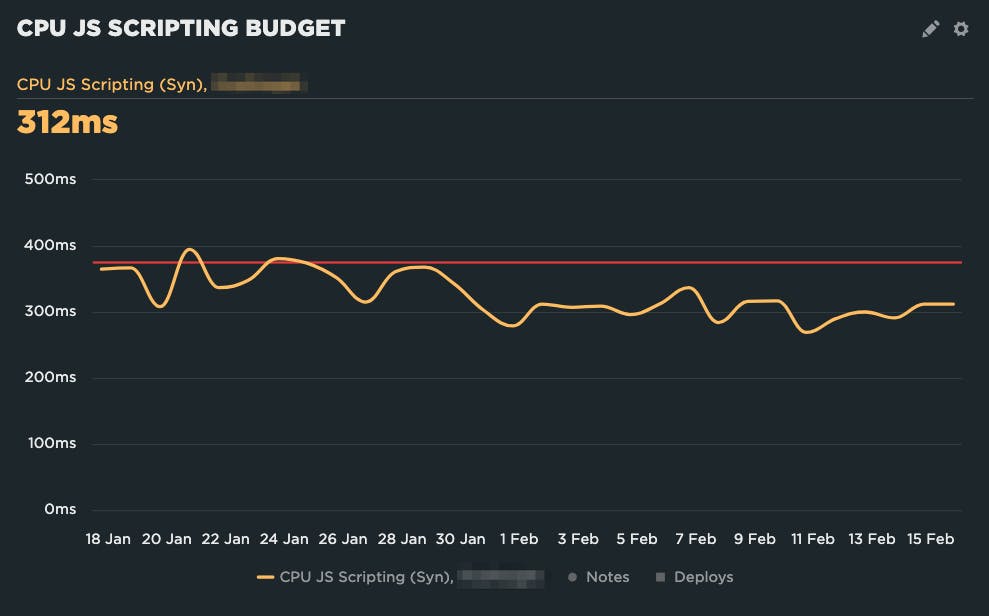

Here's an example from a recent KickStart engagement. (KickStarts are consulting projects where we help companies get set up and running with their monitoring.) The budget for JS Scripting was set to 2000ms (that’s the red line), but the site was well below budget. The budget was useless. They would have to have a regression of over 500% to trigger any sort of alert!

We ended up setting the budget at 375ms based on the last two weeks of data at the time. That enables us to get alerted on regressions without getting alert fatigue.

Looking at the chart, if they continue on the current trend, the next time they reassess their budget they may want to bring it down a little bit further, as some recent changes seem to have resulted in improvements.

Once a regression alert is received via Slack or email, the team can jump in and fix the issue before it starts to impact the broader user experience. Without fine-tuned alerting, multiple small regressions add up and can start to impact business metrics.

Automated testing of code changes

Another important guardrail is to have automated performance testing when code changes occur. Just as important, those test results should show up somewhere in the development process – where the developers are. Requiring the team to go looking for results in another tool every time adds unnecessary friction.

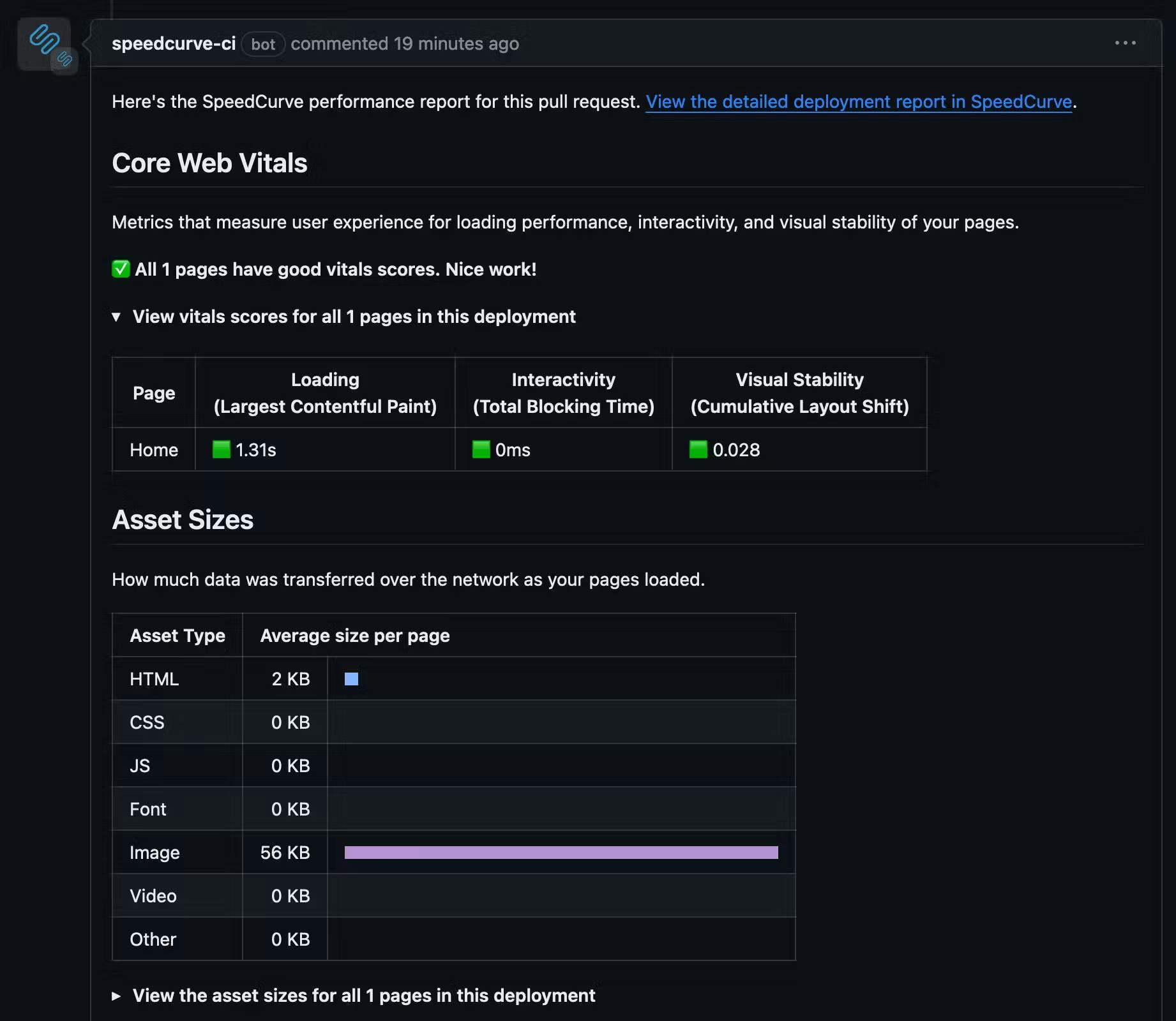

A great way to do this is to have an automated test triggered with every pull request, and then have the results put right back into the details of the pull request for easy review.

We're big fans of using CI/CD tools like a Github integration for this reason. With zero code changes (just a few button clicks to get GitHub and a tool like SpeedCurve talking to each other), you can get automated performance testing set up on every pull request. (If you’re not using GitHub, you can also use the deploy API to trigger automated tests.)

GitHub comment showing if the Core Web Vitals were passed

Now when a team makes a code change, they get immediate performance feedback right in the pull request review process, helping performance stay front of mind.

Performance guardrails

We actually don't need to log in much. We've set up performance budgets and deploy-based testing. We just wait to get alerts and then dive in to fix things.

- Patrick Hamann from Financial Times

Performance isn’t something you fix once and then walk away from. As the content and composition of our pages change, we need to make performance a continuous focus.

Performance guardrails help us get a comprehensive picture of what's happening.

- Performance budgets let us set thresholds, with alerting, on key metrics so we know right away if there's a problem

- Automated code testing puts performance information right in front of the developers, using those same performance budgets to give a clear indication of the impact of every code change

- Pairing automated testing with detailed test results ensure that developers are able to dive in and quickly identify the source of the regression

- Having annotations appear in historical charting that connects to those deployments – as well as other changes – ensures the entire team has full context in why performance may have shifted

Putting up the appropriate guardrails to protect ourselves from regressions – then pairing that with a trail of breadcrumbs so that we can dive in and quickly identify what the source is when a regression occurs – are essential steps to ensure that when we make our sites fast, they stay that way.