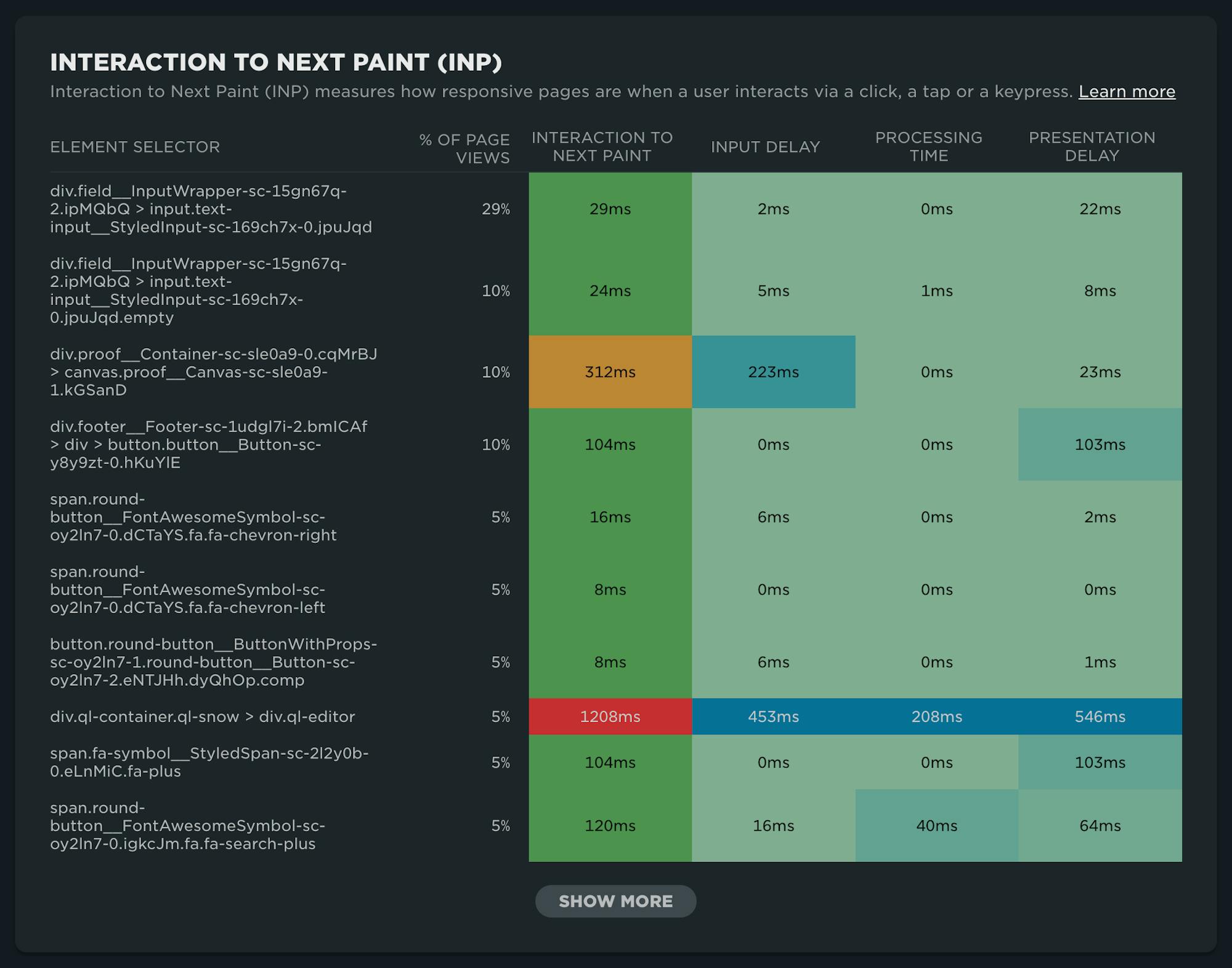

NEW: RUM attribution and subparts for Interaction to Next Paint!

Now it's even easier to find and fix Interaction to Next Paint issues and improve your Core Web Vitals.

Our newest release continues our theme of making your RUM data even more actionable. In addition to advanced settings, navigation types, and page attributes, we've just released more diagnostic detail for the latest flavor in Core Web Vitals: Interaction to Next Paint (INP).

This post covers:

- Element attribution for INP

- A breakdown of where time is spent within INP, leveraging subparts

- How to use this information to find and fix INP issues

- A look ahead at RUM diagnostics at SpeedCurve

Performance Hero: Paul Calvano

Celebrating performance wins is critical to a healthy, well-supported, high-performing team. This isn't a new idea. In fact, it's something that started in the early days of web performance when Lara Hogan, who was an engineering manager at Etsy at the time, discussed the practice of empowering people across the organization by celebrating 'performance heroes'.

In that spirit, we're reigniting the tradition of spotlighting Performance Heroes from our awesome community. It seems appropriate that we'd start with someone who is currently focused on keeping Etsy's site as fast as possible: Paul Calvano.

Not only has Paul had a long career dedicated to making the web faster for some of the largest and most popular sites in the world, he is humble, incredibly talented, and one of the kindest people you'll ever meet.

Five ways cookie consent managers hurt web performance (and how to fix them)

Cookie consent popups and banners are everywhere, and they're silently hurting the speed of your pages. Learn the most common problems – and their workarounds – when measuring performance with consent management platforms in place.

I've been spending a lot of time looking at the performance of European sites lately, and have found that consent management platforms (CMPs) consistently create a false reality for folks trying to understand performance using synthetic monitoring. Admittedly, this is not a new topic, but I feel it's important enough that it warrants another PSA.

In this post, I will cover some of the issues related to measuring performance with CMPs in place and provide some resources for scripting around consent popups in SpeedCurve.

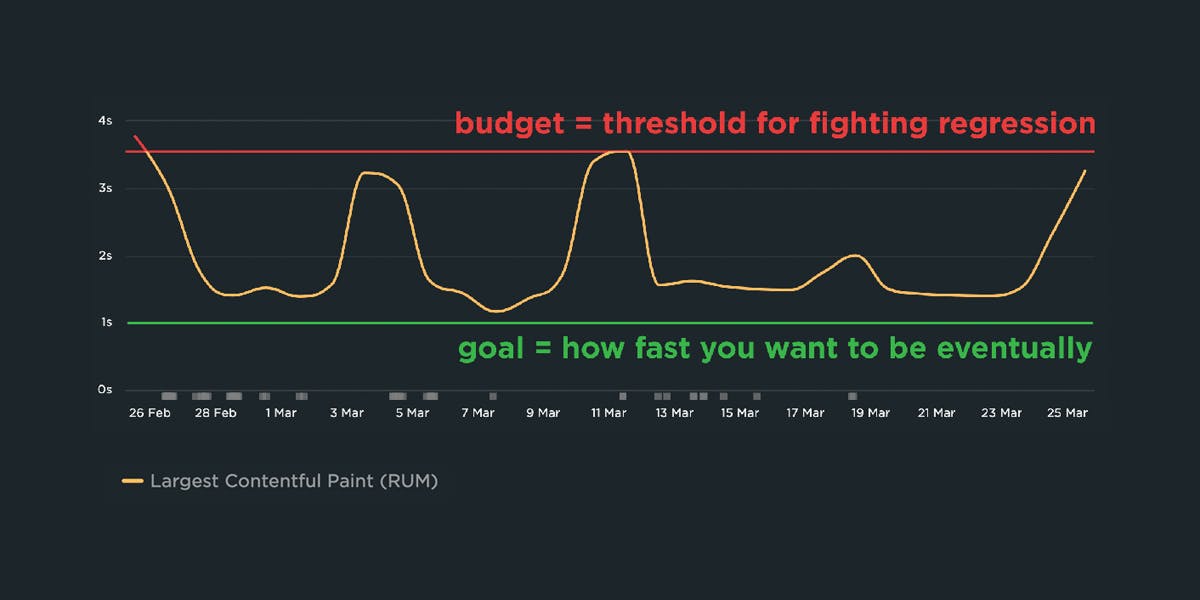

A Complete Guide to Web Performance Budgets

It's easier to make a fast website than it is to keep a website fast. If you've invested countless hours in speeding up your site, but you're not using performance budgets to prevent regressions, you could be at risk of wasting all your efforts.

In this post we'll cover how to:

- Use performance budgets to fight regressions

- Understand the difference between performance budgets and performance goals

- Identify which metrics to track

- Validate your metrics to make sure they're measuring what you think they are – and to see how they correlate with your user experience and business metrics

- Determine what your budget thresholds should be

- Focus on the pages that matter most

- Get buy-in from different stakeholders in your organization

- Integrate with your CI/CD process

- Synthesize your synthetic and real user monitoring data

- Maintain your budgets
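To make the idea of a budget threshold concrete, here is a minimal sketch of a budget check. The `Budget` and `BudgetResult` shapes, the `checkBudgets` function, and the sample numbers are all hypothetical and invented for illustration; this is not SpeedCurve's API or data model.

```typescript
// Hypothetical shapes for illustration only; not a SpeedCurve API.
interface Budget {
  metric: string;    // e.g. "LCP"
  threshold: number; // maximum allowed value, in the metric's own unit
}

interface BudgetResult {
  metric: string;
  value: number;
  threshold: number;
  exceeded: boolean;
}

// Compare measured values against budgets.
// Metrics with no measurement are skipped rather than treated as failures.
function checkBudgets(
  budgets: Budget[],
  measured: Record<string, number>
): BudgetResult[] {
  const results: BudgetResult[] = [];
  for (const { metric, threshold } of budgets) {
    const value = measured[metric];
    if (value === undefined) continue;
    results.push({ metric, value, threshold, exceeded: value > threshold });
  }
  return results;
}

// Made-up budgets and measurements (milliseconds).
const budgets: Budget[] = [
  { metric: "LCP", threshold: 2500 },
  { metric: "INP", threshold: 200 },
];
const results = checkBudgets(budgets, { LCP: 2300, INP: 260 });
const failed = results.filter((r) => r.exceeded).map((r) => r.metric);
console.log(failed);
```

In a CI/CD step, a check like this could fail the build whenever `failed` is non-empty, which is one way to turn a budget into a guard against regressions.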

The bottom of this post also contains a collection of case studies from companies that are using performance budgets to stay fast.

Let's get started!

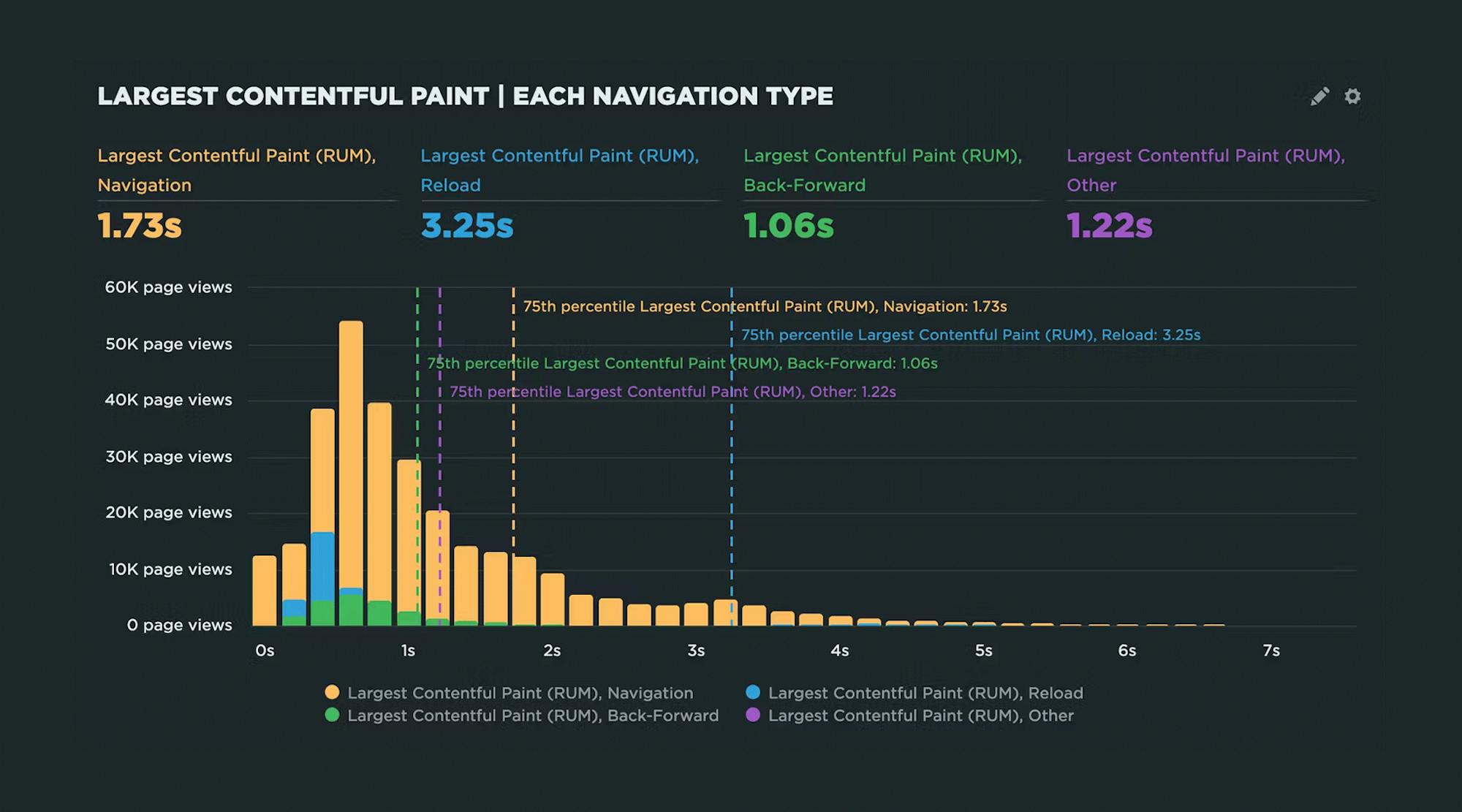

Navigate your way to better performance with prerendering and the bfcache

I was inspired by Tim Vereecke's excellent talk on noise-cancelling RUM at PerfNow this past November. In this talk, he highlighted a lot of the 'noise' that comes along with capturing RUM data. Tim's approach was to filter out the noise introduced by really fast response times that can be caused by leveraging the browser cache, prerendering, and other performance optimization techniques.

I thought Tim's focus on 'human viewable navigations' was a great approach to use when looking at how to improve user experience. But there also may be times when you want to understand and embrace the noise. Sometimes there are opportunities in the signals that we often forget are there.

In this post, I'll demonstrate how you can use SpeedCurve RUM to identify all types of navigations, their performance impact, and potential opportunities for delivering lightning-fast page speed to your users.

We'll cover things like:

- Understanding SPA navigations and performance

- Whether or not to track hidden pages (such as pages opened in background tabs)

- How to take advantage of prerendering and the back-forward cache (aka bfcache)
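As a rough illustration of how these navigation types can be told apart, the sketch below classifies a navigation from three browser signals: `persisted` from the `pageshow` event, and `activationStart` and `type` from the `PerformanceNavigationTiming` entry. The `NavSignals` shape and `classifyNavigation` function are hypothetical names for this example, and this is not necessarily how SpeedCurve RUM does it.

```typescript
type NavKind = "bfcache" | "prerender" | "back_forward" | "reload" | "navigate";

// Hypothetical container for signals you would read in the browser.
interface NavSignals {
  persisted: boolean;      // PageTransitionEvent.persisted on "pageshow"
  activationStart: number; // PerformanceNavigationTiming.activationStart (0 if not prerendered)
  type: string;            // PerformanceNavigationTiming.type
}

function classifyNavigation(s: NavSignals): NavKind {
  // A page restored from the back-forward cache fires "pageshow" with persisted = true.
  if (s.persisted) return "bfcache";
  // A prerendered page reports a non-zero activationStart once it is activated.
  if (s.activationStart > 0) return "prerender";
  if (s.type === "back_forward") return "back_forward";
  if (s.type === "reload") return "reload";
  return "navigate";
}

// Made-up signal values for a prerendered page.
const kind = classifyNavigation({ persisted: false, activationStart: 120, type: "navigate" });
console.log(kind);
```

In a real page, the inputs would come from a `pageshow` listener and `performance.getEntriesByType("navigation")[0]`. Note that `activationStart` is not available in every browser, so treat a missing value as 0.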

Hello INP! Here's everything you need to know about the newest Core Web Vital

After years of development and testing, Google has added Interaction to Next Paint (INP) to its trifecta of Core Web Vitals – the performance metrics that are a key ingredient in its search ranking algorithm. INP replaces First Input Delay (FID) as the Vitals responsiveness metric.

Not sure what INP means or why it matters? No worries – that's what this post is for. :)

- What is INP?

- Why has it replaced First Input Delay?

- How does INP correlate with user behaviour metrics, such as conversion rate?

- What you need to know about INP on mobile devices

- How to debug and optimize INP

And at the bottom of this post, we'll wrap things up with some inspiring case studies from companies that have found that improving INP has improved sales, pageviews, and bounce rate.

Let's dive in!

Continuous performance with guardrails and breadcrumbs

The hardest part about web performance isn’t making your site faster – it’s keeping it that fast. Hearing about a company that devoted significant effort to optimizing their site, only to find their performance right back where it started a few months later, is all too familiar.

The reality is that, as critical as site speed is, it’s also very easy to overlook. It doesn’t jump out like a blurry image or a layout issue. And the majority of modern tools and frameworks that are used to build sites today make it all too easy to compound the issue.

Making performance more visible throughout the development process is one of the most critical things a company can do.

I like to think of it as setting up guardrails and breadcrumbs.

- We need guardrails to help protect us from shipping code that will result in unexpected regressions.

- We need breadcrumbs that we can follow back to the source to help us identify why a metric may have changed.

Guardrails and breadcrumbs need to work together. Setting up guardrails without the ability to dig deeper will lead to frustration. Having proper breadcrumbs without guardrails in place all but assures we will constantly be fighting regressions after they’ve already wreaked havoc on our sites and our users.

Let’s take a look at both of these concepts and how they should work together.

NEW: On-demand testing in SpeedCurve!

On-demand testing has sparked a lot of discussion here at SpeedCurve over the past year. You've always had the ability to manually trigger a round of tests – based on the scheduled tests in your settings – using the 'Test Now' button. But there hasn't been a lot of flexibility to support nuanced use cases, such as...

"I just deployed changes to my site and want to check for any regressions."

"I saw a change to my RUM data and I want to see if I can replicate it with synthetic for further diagnostics."

"I have a paused site that I don't want to test regularly, but would like to test from time to time."

"Please just let me test any URL I want without setting up a site and scheduling testing."

"I need to quickly debug this script without kicking off tests for my entire site."

"I would like to get a first look at a page in order to troubleshoot regressions I saw in RUM."

Based on your feedback, we've just launched new capabilities for on-demand testing. We're pretty excited about these, and we hope you will be, too!

In this post, we'll:

- Highlight the differences between on-demand and scheduled testing

- Cover the various types of on-demand testing, including some of the more common use cases we've heard from SpeedCurve users

- Step you through running an on-demand test

Let's goooooooo!

Debugging Interaction to Next Paint (INP)

Not surprisingly, most of the conversations I've had with SpeedCurve users over the last few months have focused on improving INP.

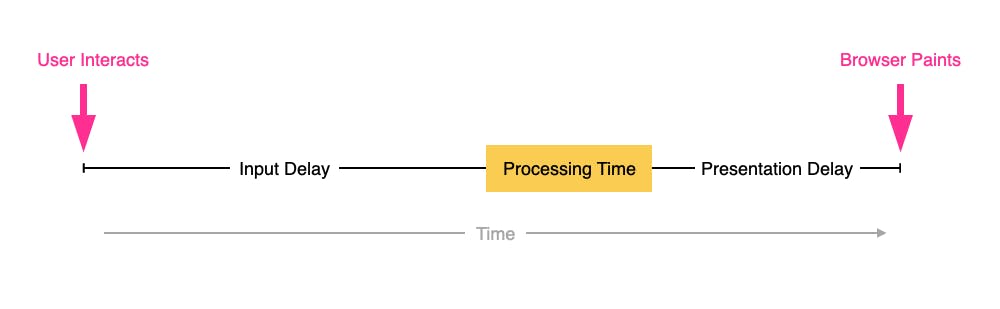

INP measures how responsive a page is to visitor interactions. It measures the elapsed time between a tap, a click, or a keypress and the browser next painting to the screen.

INP breaks down into three subparts:

- Input Delay – How long the interaction handler has to wait before executing

- Processing Time – How long the interaction handler takes to execute

- Presentation Delay – How long it takes the browser to execute any work it needs to paint updates triggered by the interaction handler
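The three subparts can be derived from the timestamps the browser exposes on a `PerformanceEventTiming` entry. The sketch below is a simplification that looks at a single entry; a real interaction can span several events (for example `pointerdown`, `pointerup`, and `click`), which RUM libraries group together. The `EventTimingLike` and `InpSubparts` names and the sample values are assumptions made for this example.

```typescript
// Subset of the fields on PerformanceEventTiming (Event Timing API).
interface EventTimingLike {
  startTime: number;       // when the interaction occurred
  processingStart: number; // when event handlers started running
  processingEnd: number;   // when event handlers finished
  duration: number;        // startTime to next paint (rounded to the nearest 8 ms by the browser)
}

interface InpSubparts {
  inputDelay: number;
  processingTime: number;
  presentationDelay: number;
}

function inpSubparts(e: EventTimingLike): InpSubparts {
  const inputDelay = e.processingStart - e.startTime;
  const processingTime = e.processingEnd - e.processingStart;
  // Duration is rounded, so clamp to avoid a small negative remainder.
  const presentationDelay = Math.max(0, e.startTime + e.duration - e.processingEnd);
  return { inputDelay, processingTime, presentationDelay };
}

// Made-up timestamps (milliseconds) for a single interaction.
const parts = inpSubparts({
  startTime: 1000,
  processingStart: 1040,
  processingEnd: 1160,
  duration: 280,
});
console.log(parts);
```

For this made-up entry, the 280 ms interaction splits into 40 ms of input delay, 120 ms of processing time, and 120 ms of presentation delay.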

Pages can have multiple interactions, so the INP time for a single page view is generally the worst (highest) interaction time on that page. RUM products and other tools, such as Google Search Console and Chrome's UX Report (CrUX), then generally report INP at the 75th percentile across page views.

Like all Core Web Vitals, INP has a set of thresholds:

INP thresholds: Good is 200 ms or less, Needs Improvement is over 200 ms and up to 500 ms, and Poor is over 500 ms.
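Here is a small sketch that combines the 75th percentile with Google's published INP boundaries (200 ms and 500 ms). The `rateInp` and `percentile` helpers are hypothetical, the sample values are invented, and the percentile uses the simple nearest-rank method; RUM tools may calculate percentiles differently.

```typescript
type Rating = "good" | "needs-improvement" | "poor";

// Google's published INP thresholds, in milliseconds.
function rateInp(ms: number): Rating {
  if (ms <= 200) return "good";
  if (ms <= 500) return "needs-improvement";
  return "poor";
}

// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no values");
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Made-up per-page-view INP values (milliseconds).
const samples = [80, 120, 150, 240, 600, 90, 310, 180];
const p75 = percentile(samples, 75);
const rating = rateInp(p75);
console.log(p75, rating);
```

With these sample values, most page views are individually Good, but the 75th percentile lands at 240 ms, which puts the page in Needs Improvement.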

Many sites tend to be in the Needs Improvement or Poor categories. My experience over the last few months is that getting to Good is achievable, but it's not always easy.

In this post I'm going to walk through:

- How I help people identify the causes of poor INP times

- Examples of some of the most common issues

- Approaches I've used to help sites improve their INP

How to use Server Timing to get backend transparency from your CDN

80% of end-user response time is spent on the front end.

That performance golden rule still holds true today. However, that pesky 20% on the back end can have a big impact on downstream metrics like First Contentful Paint (FCP), Largest Contentful Paint (LCP), and any other 'loading' metric you can think of.

Server-timing headers are a key tool in understanding what's happening within that black box of Time to First Byte (TTFB).

In this post we'll explore a few areas:

- Look at industry benchmarks to get an idea of how a slow backend influences key metrics, including Core Web Vitals

- Demonstrate how you can use server-timing headers to break down where that time is being spent

- Provide examples of how you can use server-timing headers to get more visibility into your content delivery network (CDN)

- Show how you can capture server-timing headers in SpeedCurve
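To give a feel for what a Server-Timing header carries, here is a minimal sketch of a parser for its `name;dur=...;desc=...` syntax. The `parseServerTiming` function, the `ServerTimingMetric` shape, and the metric names in the example header are assumptions for illustration, not what any particular CDN sends. The parser is deliberately simplified: it splits on commas and semicolons, so it would mishandle a quoted description that contains either character.

```typescript
interface ServerTimingMetric {
  name: string;
  dur?: number;  // duration in milliseconds, if present
  desc?: string; // free-form description, if present
}

function parseServerTiming(header: string): ServerTimingMetric[] {
  const metrics: ServerTimingMetric[] = [];
  for (const part of header.split(",")) {
    const [rawName, ...params] = part.split(";").map((s) => s.trim());
    if (!rawName) continue;
    const metric: ServerTimingMetric = { name: rawName };
    for (const param of params) {
      const eq = param.indexOf("=");
      if (eq === -1) continue;
      const key = param.slice(0, eq).trim().toLowerCase();
      // Strip optional surrounding double quotes from the value.
      const value = param.slice(eq + 1).trim().replace(/^"|"$/g, "");
      if (key === "dur") {
        const n = Number(value);
        if (value !== "" && !isNaN(n)) metric.dur = n;
      } else if (key === "desc") {
        metric.desc = value;
      }
    }
    metrics.push(metric);
  }
  return metrics;
}

// Hypothetical header value; real metric names vary by CDN and origin.
const exampleHeader = 'cdn-cache;desc=HIT, edge;dur=12.5, origin;dur=230;desc="Origin fetch"';
const timings = parseServerTiming(exampleHeader);
console.log(timings);
```

In the browser you usually don't need to parse the header yourself: the `serverTiming` property on the `PerformanceNavigationTiming` entry exposes the same values as `name`, `duration`, and `description`.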