Five ways cookie consent managers hurt web performance (and how to fix them)

Cookie consent popups and banners are everywhere, and they're silently hurting the speed of your pages. Learn the most common problems – and their workarounds – when measuring performance with consent management platforms in place.

I've been spending a lot of time looking at the performance of European sites lately, and have found that consent management platforms (CMPs) consistently create a false reality for folks trying to understand performance using synthetic monitoring. Admittedly, this is not a new topic, but I feel it's important enough to warrant another PSA.

In this post, I will cover some of the issues related to measuring performance with CMPs in place and provide some resources for scripting around consent popups in SpeedCurve.

How to use Server Timing to get backend transparency from your CDN

80% of end-user response time is spent on the front end.

That performance golden rule still holds true today. However, that pesky 20% on the back end can have a big impact on downstream metrics like First Contentful Paint (FCP), Largest Contentful Paint (LCP), and any other 'loading' metric you can think of.

Server-timing headers are a key tool in understanding what's happening within that black box of Time to First Byte (TTFB).

In this post we'll explore a few areas:

- Look at industry benchmarks to get an idea of how a slow backend influences key metrics, including Core Web Vitals

- Demonstrate how you can use server-timing headers to break down where that time is being spent

- Provide examples of how you can use server-timing headers to get more visibility into your content delivery network (CDN)

- Show how you can capture server-timing headers in SpeedCurve
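As a rough illustration of what a Server-Timing header carries, here is a minimal Python sketch that splits a header value into named metrics with optional `dur` and `desc` parameters. The metric names (`cdn-cache`, `edge`, `origin`) and the sample value are hypothetical; real CDNs emit their own names. The parser is deliberately naive: it splits on commas, so it would mishandle a quoted `desc` that itself contains a comma.

```python
def parse_server_timing(header: str) -> list[dict]:
    """Parse a Server-Timing header value into a list of metric dicts.

    Each entry looks like: name; dur=<milliseconds>; desc=<text>
    Both parameters are optional.
    """
    metrics = []
    for entry in header.split(","):
        parts = [p.strip() for p in entry.split(";")]
        name = parts[0]
        if not name:
            continue  # skip empty entries such as a trailing comma
        metric = {"name": name, "dur": None, "desc": None}
        for param in parts[1:]:
            key, _, value = param.partition("=")
            key = key.strip().lower()
            value = value.strip().strip('"')
            if key == "dur":
                try:
                    metric["dur"] = float(value)
                except ValueError:
                    pass  # leave dur as None if it is not a number
            elif key == "desc":
                metric["desc"] = value
        metrics.append(metric)
    return metrics


# Hypothetical header value, for illustration only.
sample = "cdn-cache; desc=HIT, edge; dur=1.5, origin; dur=250"
for m in parse_server_timing(sample):
    print(m["name"], m["dur"], m["desc"])
```

In the browser, the same entries are exposed through the `serverTiming` property of navigation and resource timing entries, so a parser like this is mainly useful when inspecting raw response headers.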

10 things I love about SpeedCurve (that I think you'll love, too)

This month, SpeedCurve enters double digits with our tenth birthday. We're officially in our tweens! (Cue the mood swings?)

I joined the team in early 2017, and I'm blown away at how quickly the years have flown by. Every day, I marvel at my great luck in getting to work alongside an amazing team to build amazing tools to help amazing people like you!

In the spirit of celebration, I thought it would be fun to round up my ten favourite things to do in SpeedCurve (that I think you'll like, too). Keep scrolling to learn how to:

- Fight regressions and stay fast

- See the impact of performance on your business

- Benchmark your site against your competitors

- Track third parties to make sure they're not quietly hurting performance

- Make sure you're tracking the best metrics for your pages

- Get a prioritized list of performance recommendations

- Bookmark and compare synthetic tests and RUM sessions so you can quickly find and fix performance issues

- Run A/B tests so you see how code changes affect your performance and user engagement metrics

- Get customized weekly reports

- Motivate your team with a wall-mounted monitor showing your favourite charts

UPDATE: Bookmark and compare synthetic tests

One of the huge benefits of tracking web performance over time is the ability to see trends and compare metrics. Last year we added new functionality that makes it easy for you to bookmark and compare different synthetic tests in your test history. We recently added some additional enhancements to make comparing tests even easier.

With the 'Compare' feature, you can generate side-by-side comparisons that let you not only spot regressions, but easily identify what caused them:

- Compare the same page at different points in time

- Compare two versions of the same page – for example, one with ads and one without

- Understand which metrics got better or worse

- Identify which common requests got bigger/smaller or slower/faster

- Spot any new or unique requests – such as JavaScript and images – and see their impact on performance
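To make the idea of comparing requests between two tests concrete, here is a small Python sketch. It is not how the 'Compare' feature is implemented; it only assumes each test can be reduced to a mapping of request URL to transferred bytes, and the URLs and sizes below are invented for illustration.

```python
def compare_requests(before: dict[str, int], after: dict[str, int]) -> dict:
    """Compare two tests, each given as {url: bytes transferred}."""
    new = sorted(set(after) - set(before))        # only in the later test
    removed = sorted(set(before) - set(after))    # only in the earlier test
    # For requests common to both tests, record how much the size changed.
    size_delta = {
        url: after[url] - before[url]
        for url in before.keys() & after.keys()
        if after[url] != before[url]
    }
    return {"new": new, "removed": removed, "size_delta": size_delta}


# Hypothetical data for two runs of the same page.
run_a = {"/app.js": 120_000, "/hero.jpg": 300_000, "/old.css": 8_000}
run_b = {"/app.js": 180_000, "/hero.jpg": 300_000, "/ads.js": 95_000}
print(compare_requests(run_a, run_b))
```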

Along the way, we've also made it much more intuitive for you to drill down into your detailed synthetic test results. Let's take a look...

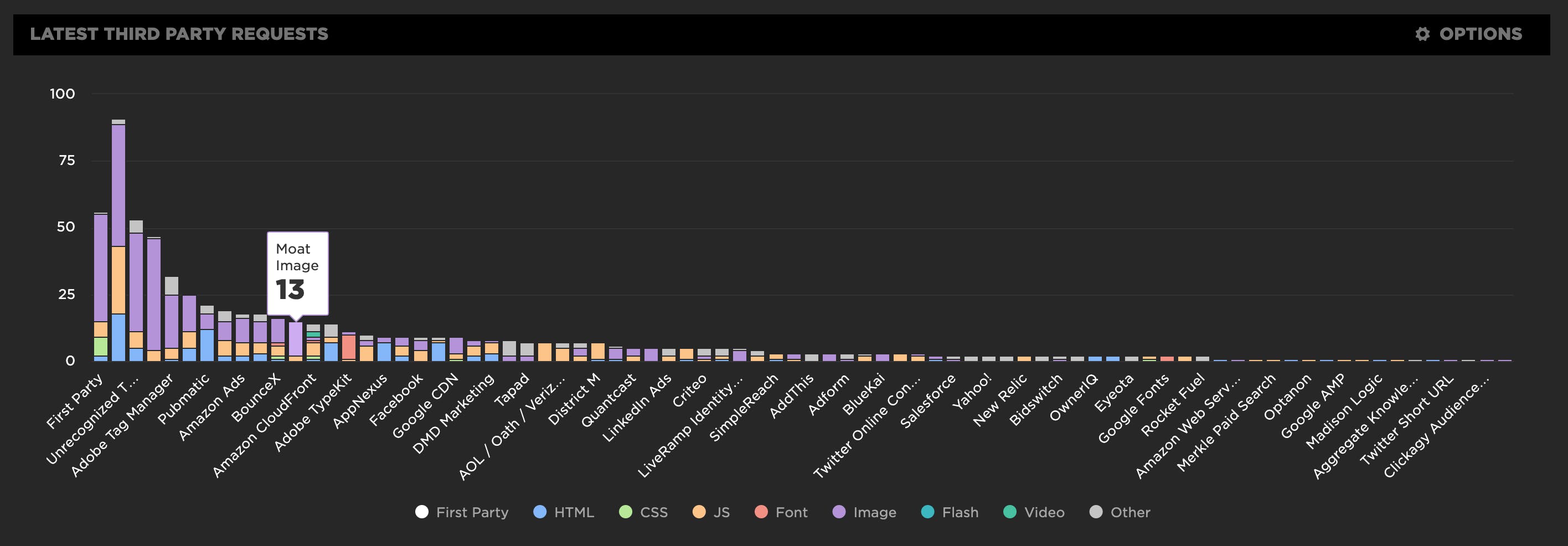

New! Tracking first- and third-party request groups

Getting visibility into the impact that known third parties have on the user experience has long been a focus in our community. There are some great tools out there – like 3rdParty.io from Nic Jansma and Request Map from Simon Hearne – which give us important insight into the complexity involved in tracking third-party content.

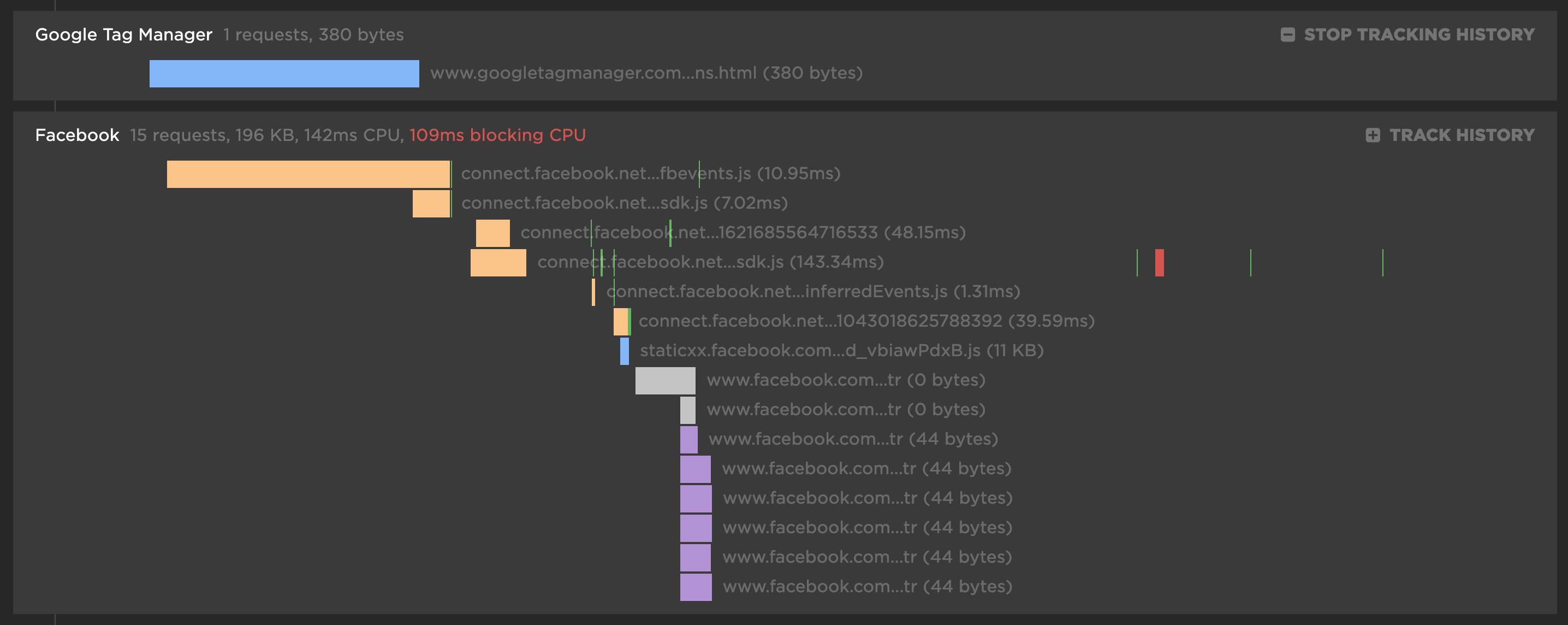

When we released our re-imagined Third Party Dashboard last year, we were excited to be providing site owners with another great tool for managing the unmanageable. Among other things, we took an approach that included:

- grouping requests,

- exposing Long Tasks attributed to third parties, and

- tracking blocking CPU time.

This provided even more insight into the different ways JavaScript could be causing real headaches for users.

We received a lot of feedback from our customers, who loved the new third-party functionality but REALLY wanted to see similar functionality for their "first party" content as well. We heard this message loud and clear, and today we're happy to announce a few changes to our Synthetic monitoring tool that address this need while preserving the functionality you already know and love.

New! User Happiness metric, CI plugin, and an inspiring third-party success story

Here at SpeedCurve, the past few months have found us obsessing over how to define and measure user happiness. We've also been scrutinizing JS performance, particularly as it applies to third parties. And as always, we're constantly working to find ways to improve your experience using our tools. See below for exciting updates on all these fronts.

As always, we love hearing from you, so please send your feedback and suggestions our way!

Third party blame game

Our third party metrics and dashboard have had an exciting revamp. With new metrics like blocking CPU, you can now see exactly who is really to blame for a crappy user experience. We've also given you the ability to monitor individual third parties over time and create performance budgets for them.

It's not you, it's me

Or is it really you, and not me? We now automatically group all the requests in our third party waterfall chart, letting you easily identify all the third party services used on your website.

For each third party, you get the number of requests and size for each content type. There's also a first party comparison you can toggle on/off to see what proportion of your requests come from first party vs third party.
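The first party vs third party breakdown described above can be sketched roughly as follows. This is an illustrative Python example, not the dashboard's actual logic: it assumes each request is a dict with `url`, `type`, and `bytes` keys, and that you supply your own list of first-party hostnames. All names and values here are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def summarize_by_party(requests: list[dict], first_party_hosts: list[str]) -> dict:
    """Count requests and bytes per (party, content type)."""
    summary = defaultdict(lambda: {"requests": 0, "bytes": 0})
    for req in requests:
        host = urlsplit(req["url"]).hostname or ""
        # Treat the listed hosts and their subdomains as first party.
        is_first = any(
            host == h or host.endswith("." + h) for h in first_party_hosts
        )
        key = ("first" if is_first else "third", req["type"])
        summary[key]["requests"] += 1
        summary[key]["bytes"] += req["bytes"]
    return dict(summary)


# Hypothetical requests for a single page load.
page_requests = [
    {"url": "https://www.example.com/app.js", "type": "js", "bytes": 120_000},
    {"url": "https://cdn.example.com/hero.jpg", "type": "image", "bytes": 300_000},
    {"url": "https://tracker.example.net/t.js", "type": "js", "bytes": 45_000},
    {"url": "https://ads.example.org/ad.js", "type": "js", "bytes": 95_000},
]
print(summarize_by_party(page_requests, ["example.com"]))
```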

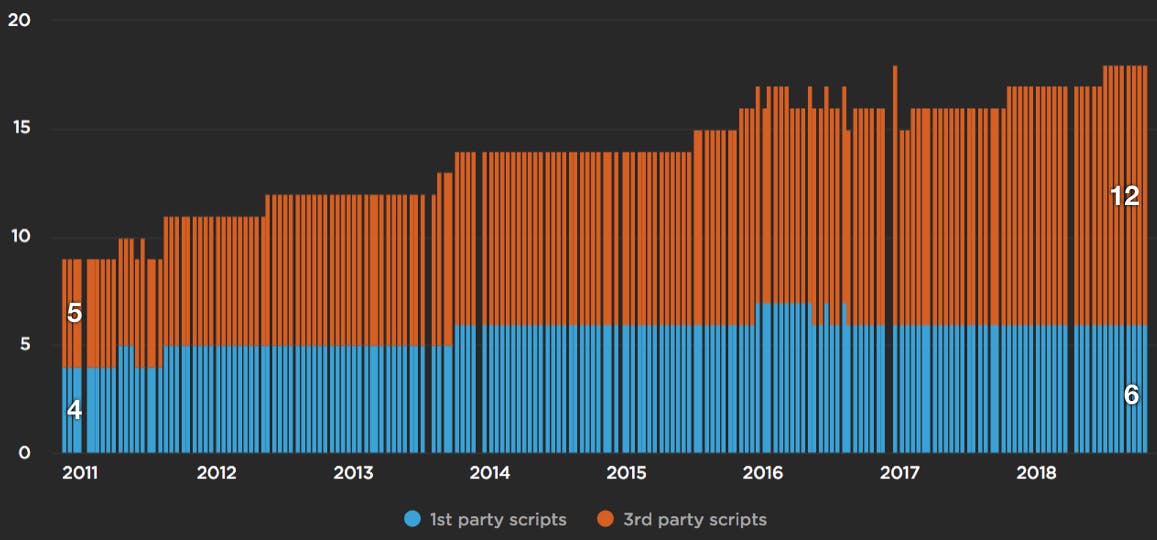

JavaScript growth and third parties

JavaScript is the main cause of slow websites. Ten years ago it was network bottlenecks, but the growth of JavaScript has outpaced network and CPU improvements on today's devices. In the chart below, based on an analysis from the HTTP Archive, we see the number of requests has increased for both first and third party JavaScript since 2011.

UX Focus for Waterfalls and Third Parties

At SpeedCurve, we want to help designers and developers have better insight into the user experience they're delivering. For websites, this means understanding when the critical parts of the page render and what might be blocking rendering.

We've redesigned our waterfall chart to really highlight the relationship between the assets on the page and their effect on the user experience. Now, as you move your mouse over the waterfall chart, we show you exactly what a user is seeing at that millisecond while the page loads. This makes it much easier to identify any JavaScript or CSS that might be blocking the page from rendering. I recently used this new combined waterfall and filmstrip view to identify a common issue with hero images being delayed.