Downtime vs slowtime: Which costs you more?

Comparing site outages to page slowdowns is like comparing a tire blowout to a slow leak. One is big and dramatic. The other is quiet and insidious. Either way, you end up stranded on the side of the road.

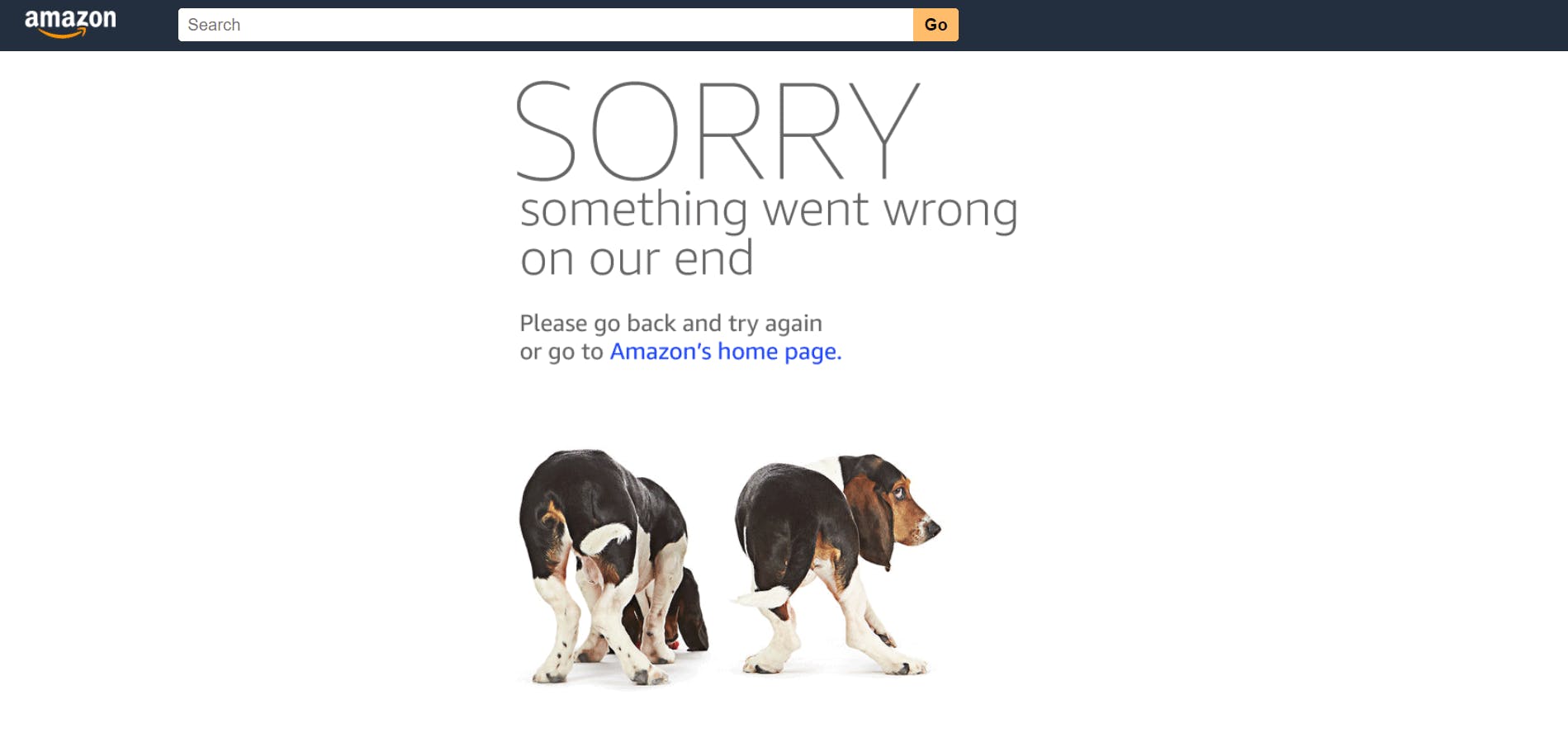

Downtime is horrifying for any company that uses the web as a vital part of its business (which is to say, most companies). Some of you may remember the Amazon outage of 2013, when the retail behemoth went down for 40 minutes. The incident made headlines, largely because those 40 minutes were estimated to have cost the company $5 million in lost sales.

Downtime makes headlines:

- 2015 – 12-hour Apple outage cost the company $25 million

- 2016 – 5-hour outage caused an estimated loss of $150 million for Delta Airlines

- 2019 – 14-hour outage cost Facebook an estimated $90 million

It's easy to see why these stories capture our attention. These are big numbers! No company wants to think about losing millions in revenue due to an outage.
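To put those headline figures on a common footing, here's a rough back-of-the-envelope sketch that converts each one into an average loss per minute. The durations and dollar amounts are the estimates quoted above. The helper name `cost_per_minute` is just for illustration, and spreading the loss evenly across the outage is a simplifying assumption, since real losses depend on time of day and traffic.

```python
# Outage figures as quoted in this post:
# (label, duration in minutes, estimated loss in USD).
OUTAGES = [
    ("Amazon, 2013", 40, 5_000_000),
    ("Apple, 2015", 12 * 60, 25_000_000),
    ("Delta Airlines, 2016", 5 * 60, 150_000_000),
    ("Facebook, 2019", 14 * 60, 90_000_000),
]


def cost_per_minute(minutes: int, loss_usd: int) -> float:
    """Average estimated loss per minute, assuming the loss is spread evenly."""
    return loss_usd / minutes


for label, minutes, loss in OUTAGES:
    print(f"{label}: ${cost_per_minute(minutes, loss):,.0f} per minute")
```

By this measure, the 40-minute Amazon outage works out to $125,000 per minute.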

Page slowdowns can cause as much damage as downtime

While Amazon and other big players take pains to avoid outages, these companies also go to great effort to manage the day-to-day performance – in terms of page speed and user experience – of their sites. That’s because these companies know that page slowdowns can cause at least as much damage as downtime.

NEW! Synthetic test agent updates: Chrome, Firefox and Lighthouse

This month, we've made some updates to our synthetic testing agents. In addition to upgrading the underlying operating system, we've added support for:

- Lighthouse 12.3.0 (previously 10.4.0)

- Chrome 133 (previously 126)

- Firefox 135 (previously 128)

Performance Hero: Sergey Chernyshev

We often hear how special, generous, and supportive the web performance community is. This didn't happen overnight. This month, we're excited to recognize someone who has been a huge part of creating the community culture we enjoy today: Sergey Chernyshev.

Whether answering questions on social media, helping someone with a proposal for a conference talk, or simply being welcoming and kind to newcomers, webperf folks are some of the most generous people you could ever hope to find. There are so many folks out there who are organizing, educating, evangelizing, and building great tooling in an effort to improve user experience on the web. Sergey has been doing all of those things earlier and longer than almost everyone!

Six things that slow down your site's UX (and why you have no control over them)

Have you ever looked at the page speed metrics – such as Start Render and Largest Contentful Paint – for your site in both your synthetic and real user monitoring tools and wondered "Why are these numbers so different?"

Part of the answer is this: You have a lot of control over the design and code for the pages on your site, plus a decent amount of control over the first and middle mile of the network your pages travel over. But when it comes to the last mile – or more specifically, the last few feet – matters are no longer in your hands.

Your synthetic testing tool shows you how your pages perform in a clean lab environment, using variables – such as browser, connection type, even CPU power – that you've selected.

Your real user monitoring (RUM) tool shows you how your pages perform out in the real world, where they're affected by a myriad of variables that are completely outside your control.

In this post we'll review a handful of those performance-leaching culprits that are outside your control – and that can add precious seconds to the amount of time it takes for your pages to render for your users. Then we'll talk about how to use your monitoring tools to understand how real people experience your site.

Page bloat update: How does ever-increasing page size affect your business and your users?

The median web page is 8% bigger than it was just one year ago. How does this affect your page speed, your Core Web Vitals, your search rank, your business, and most important – your users? Keep scrolling for the latest trends and analysis.

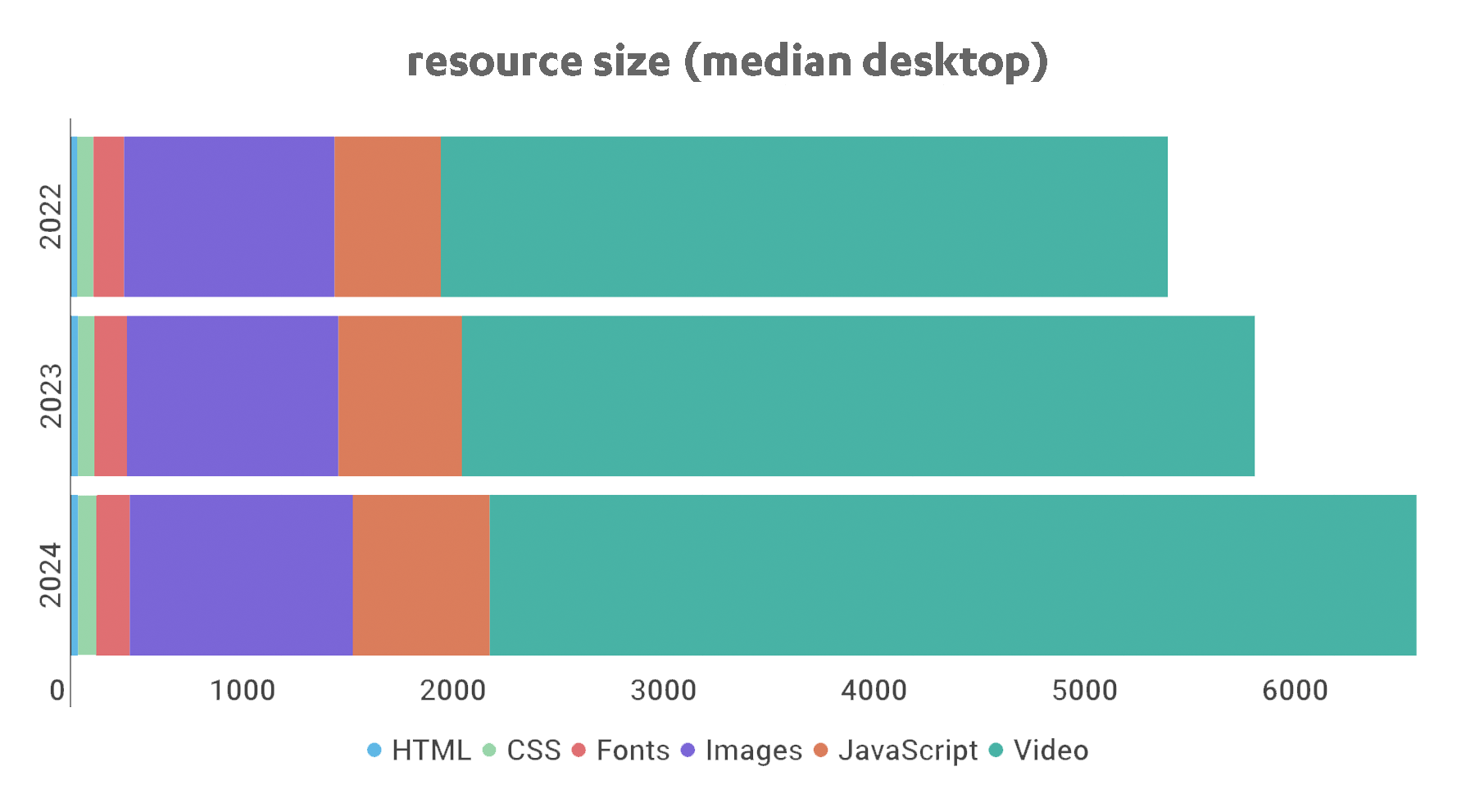

For almost fifteen years, I've been writing about page bloat, its impact on site speed, and ultimately how it affects your users and your business. You might think this topic would be exhausted by now, but every year I learn new things – beyond the overarching fact that pages keep getting bigger and more complex, as you can see in this chart, using data from the HTTP Archive:

In this post, we'll cover:

- How much pages have grown over the past year

- How page bloat hurts your business and – at the heart of everything – your users

- How page bloat affects Google's Core Web Vitals (and therefore SEO)

- Whether it's possible to have large pages that still deliver a good user experience

- Page size targets

- How to track page size and complexity

- How to fight regressions

Performance Hero: Annie Sullivan

Let's kick off the new year by celebrating someone who has not just had a huge impact on web performance over the past few years, but who has even more exciting stuff in the works for the future: Annie Sullivan!

Annie leads the Chrome Speed Metrics team at Google, which has arguably had the most significant impact on web performance of the past decade. We've gotten to know Annie through frequent discussions, feedback sessions, and hallway talks at various events. Most recently we caught her closing keynote at performance.now() in November.

Speaking from experience, driving change at scale from within a large organization can be very challenging. Annie and her team navigate this arduous task with true passion for web performance and for improving the user experience. Read on for a great recap of a recent discussion with Annie and just a few of the highlights that make her a true performance hero.

Our 10 most popular web performance articles of 2024

We love writing articles and blog posts that help folks solve real web performance and UX problems. Here are the ones you loved most in 2024. (The number one item may surprise you!)

Some of these articles come from our recently published Web Performance Guide – a collection of evergreen how-to resources (written by actual humans!) that will help you master website monitoring, analytics, and diagnostics. The rest come from this blog, where we tend to publish industry news and analysis.

Regardless of the source, we hope you find these pieces useful!

ICYMI: Some of our most exciting product updates of 2024!

Every year feels like a big year here at SpeedCurve, and 2024 was no exception. Here's a recap of product highlights designed to make your performance monitoring even better and easier!

Our biggest achievements this year have centred on making it easier for you to:

- Gather more meaningful real user monitoring (RUM) data

- Get actionable insights from Core Web Vitals

- Simplify your synthetic testing

- Get expert performance coaching when and how you need it

Keep reading to learn more...

Performance Hero: Pat Meenan

This month, we celebrate everything that OG performance hero Pat Meenan has done – and continues to do – for the web performance community.

When we started the Performance Hero series earlier this year, we had an idea of the types of folks in our community we wanted to acknowledge:

- People who are making a difference in web performance

- People who are humble

- People who give without expectation

- People who don't necessarily crave the spotlight

When looking at these attributes – for a lot of us who have been around this space for more years than we care to mention – it's hard not to think about everyone's favorite web performance OG: Pat Meenan. This month, we celebrate all that Pat has done and continues to do for web performance.

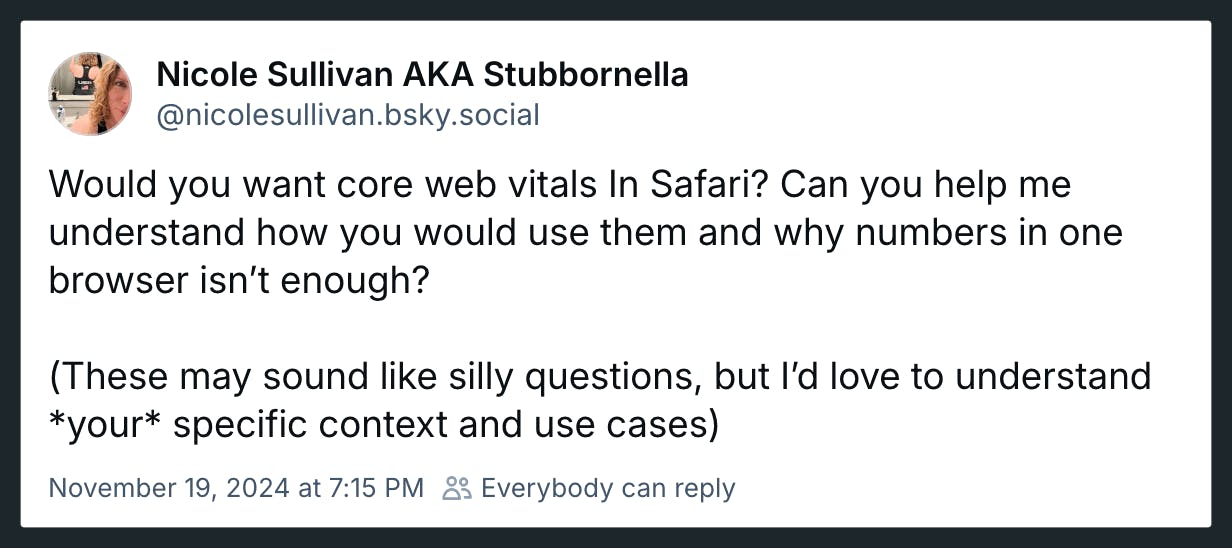

A Holiday Wish: Core Web Vitals in Safari

Did you know that key performance metrics – like Core Web Vitals – aren't supported in Safari? If that's news to you, you're not alone! Here's why that is... and what we and the rest of the web performance community are doing to fix it.

Somebody pinch me. Seeing this post and the resulting thread gives me great hope.

Nicole Sullivan (aka Stubbornella, WebKit Engineering Manager at Apple, and OG web performance evangelist) isn't making promises or dangling a carrot. Nonetheless, her post is evidence of a willingness to publicly discuss a topic that's been debated exhaustively in our community for years. It has drawn some great responses from many leaders in our community, which will hopefully help build a strong case for future WebKit support for Core Web Vitals.

(If you're new to performance, Core Web Vitals is a set of three metrics – Largest Contentful Paint, Cumulative Layout Shift, and Interaction to Next Paint – that are intended to measure the rendering speed, visual stability, and interactivity of web pages.)
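For a concrete sense of how those three metrics are judged, here's a minimal sketch that rates a single value against the "good" and "poor" thresholds Google publishes for Core Web Vitals. The function name `rate` and the dictionary layout are purely illustrative, not part of any tool's API. In practice these thresholds are applied to the 75th percentile of real user data rather than to one measurement.

```python
# Published Core Web Vitals thresholds:
# (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless score
}


def rate(metric: str, value: float) -> str:
    """Return 'good', 'needs improvement', or 'poor' for one metric value."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Illustrative values only, not measurements from any real site.
for metric, value in [("LCP", 3000), ("INP", 150), ("CLS", 0.3)]:
    print(f"{metric} = {value}: {rate(metric, value)}")
```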

In this post, I'm going to highlight some of the discussion around the topic of Core Web Vitals and Safari, which was a major theme coming out of the recent web performance marathon in Amsterdam that included WebPerf Days, performance.sync(), and the main event, performance.now().