Are your retail landing pages killing conversions?

You've probably started to notice retail campaigns for Halloween, Thanksgiving, and even Christmas! Online campaigns are pricey, so the landing page should be the MOST scrutinized page of your site — but too often it's an afterthought.

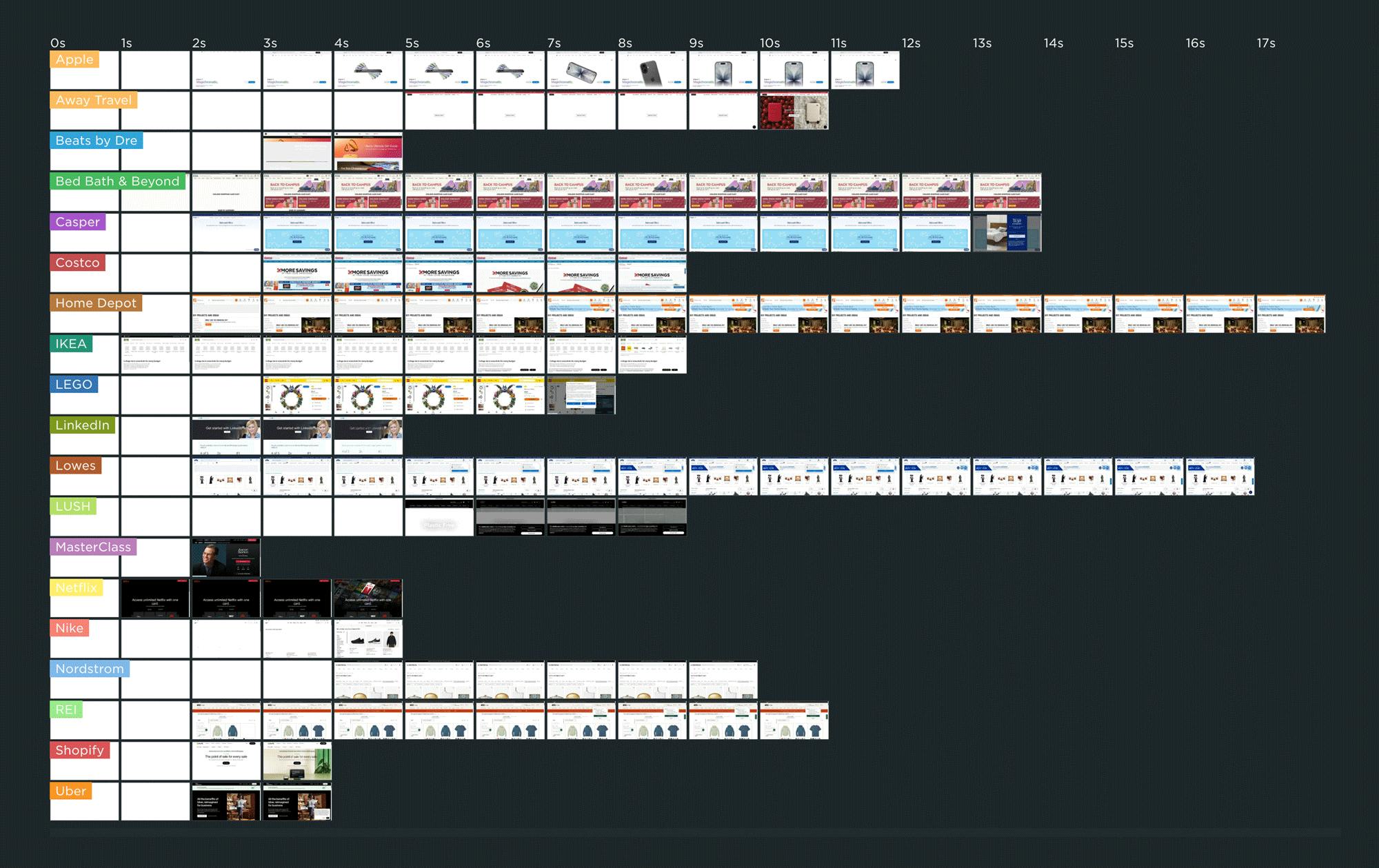

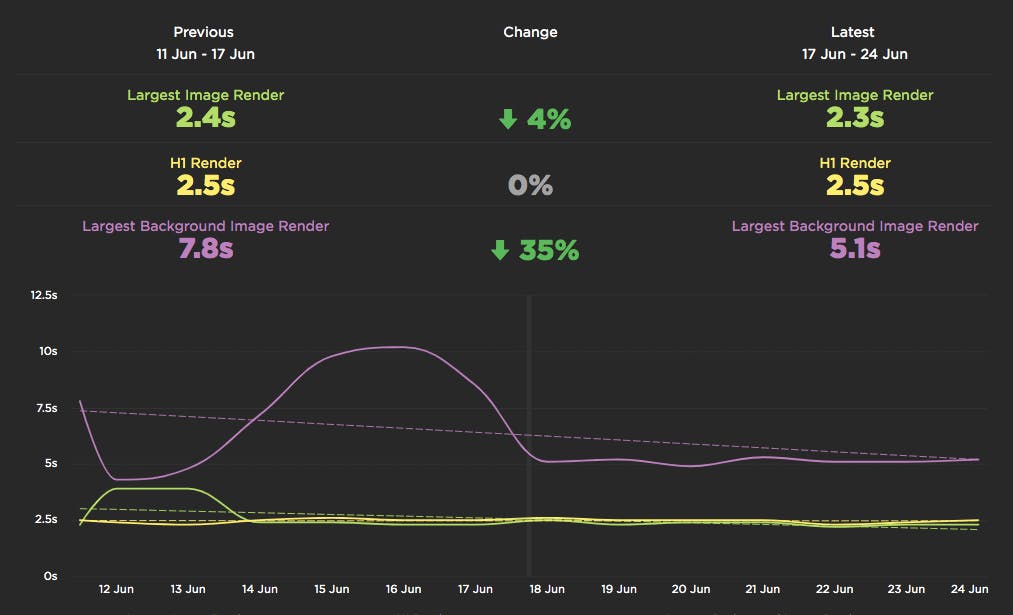

I tracked the rendering times of campaign landing pages for leading retailers to see how they compare — who's fast, who's slow, and what's causing their page speed bottlenecks.

As you can see in this competitive benchmarking leaderboard, rendering speeds varied across pages ranging from Apple to Uber. The fastest campaign page — which belonged to IKEA.com — started to render in about 1 second, which is great to see.

But for many of the other pages, it was common to wait 3 or 4 seconds — or longer! — to see meaningful content.

In this post, we'll cover:

- Why the speed of your campaign landing pages matters... possibly even more than most of the other pages on your site

- Why performance issues on campaign landing pages often go unnoticed

- Common performance issues on landing pages

- A deep dive into the landing page for one of the sites I tracked

- Who should be responsible for the performance of the landing pages on your site?

- What you can do to make sure your campaign landing pages stay fast

How do Long Animation Frames affect user behavior?

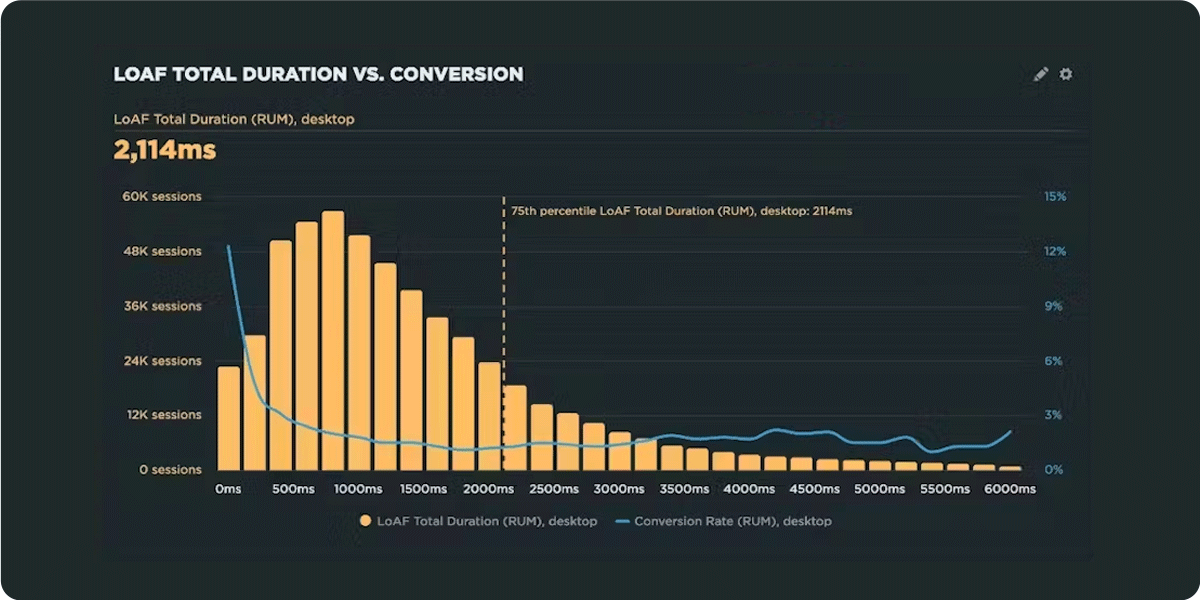

What's the point of a performance metric that doesn't align with user behavior – and ultimately business outcomes? Looking at four different retail sites, we compared each LoAF metric for desktop and mobile and correlated it to conversion rate. We saw some surprising trends alongside some expected patterns.

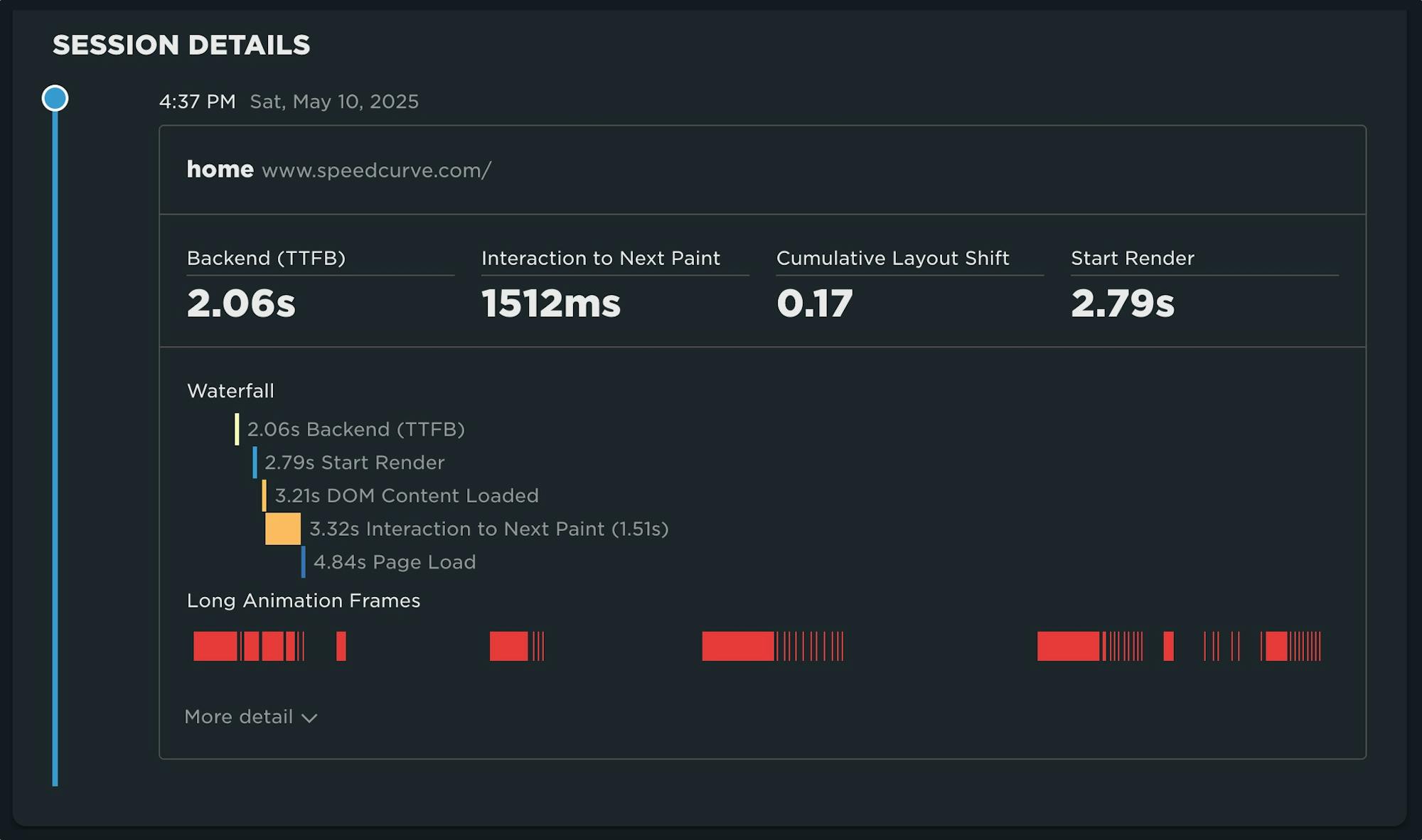

We recently shipped support for Long Animation Frames (LoAF). We're buzzing with excitement about having better diagnostic capabilities, including script attribution for INP and our new experimental metric, Total Blocking Duration (TBD).

While Andy has gone deep in the weeds on LoAF, in this post let's put the new set of metrics to the test and see how well they reflect the user experience. We'll look at real-world data from real websites and find an answer to the question: How do Long Animation Frames affect user behavior?

The Definitive Guide to Long Animation Frames (LoAF)

With Long Animation Frames (commonly referred to as LoAF, pronounced 'LO-aff') we finally have a way to understand the impact of our code on our visitors' experiences.

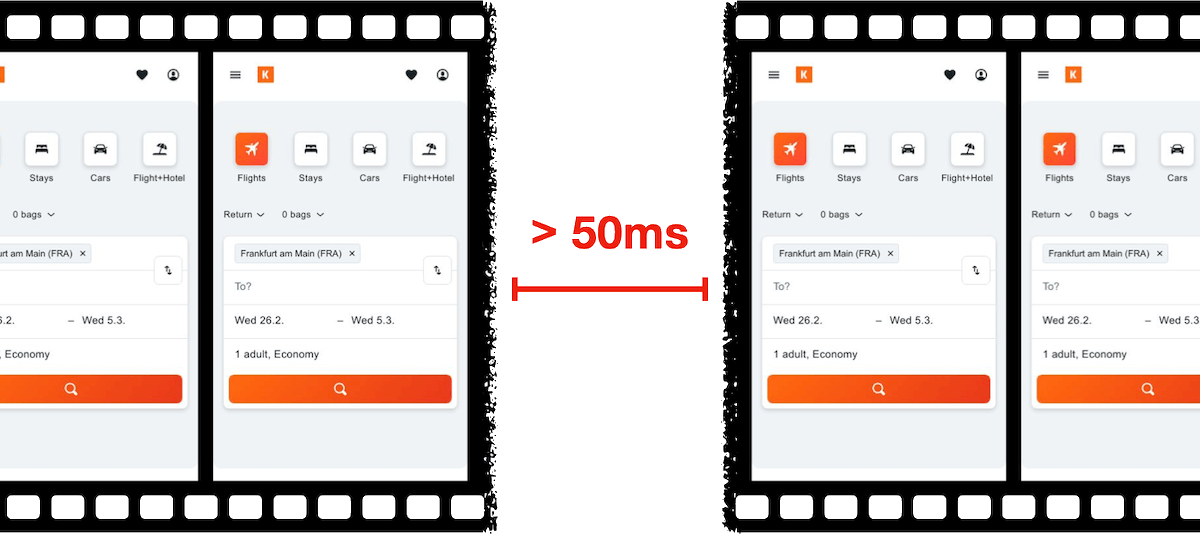

Long Animation Frame – a frame that took longer than 50ms from its start to when it started painting

LoAF allows us to understand how scripts and other tasks affect both hard and soft navigations, as well as how scripts affect interactions. Using the data LoAF provides, we can identify problem scripts and target changes that improve our visitors' experience. We can also finally start to quantify the impact of third-party scripts as they execute in our visitors' browsers.

Keep reading to learn:

- Why animation frame rate matters

- Anatomy of a Long Animation Frame

- Key LoAF milestones and what we can do with milestone data

- Script attribution (and why script details might sometimes be unavailable)

- How to match script data to Interaction to Next Paint, including sub-parts

- How to capture LoAF entries

- Getting started with LoAF

- LoAF support in SpeedCurve
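As a rough sketch of what capturing LoAF entries and attributing time to scripts can look like, the snippet below groups script time by source URL. The helper names `summarizeLoafEntries` and `observeLoaf` are hypothetical, and summing `blockingDuration` across frames is only an illustration; it is not necessarily how SpeedCurve calculates Total Blocking Duration. The observer part only does anything in browsers that support the `long-animation-frame` entry type.

```javascript
// Hypothetical helper: group script time by source URL for LoAF-like entries.
// Each entry mirrors the fields of a long-animation-frame performance entry
// that this sketch relies on: `blockingDuration` and `scripts`.
function summarizeLoafEntries(entries) {
  const byScript = new Map();
  let totalBlockingDuration = 0;

  for (const entry of entries) {
    totalBlockingDuration += entry.blockingDuration;
    for (const script of entry.scripts) {
      // sourceURL can be empty when script attribution is unavailable.
      const key = script.sourceURL || "(unattributed)";
      byScript.set(key, (byScript.get(key) || 0) + script.duration);
    }
  }

  // Sort scripts by accumulated time, slowest first.
  const scripts = [...byScript.entries()]
    .map(([sourceURL, duration]) => ({ sourceURL, duration }))
    .sort((a, b) => b.duration - a.duration);

  return { totalBlockingDuration, scripts };
}

// Hypothetical wiring: observe LoAF entries where the API is supported.
// Returns null when the entry type is not available (for example, outside
// a supporting browser).
function observeLoaf(onSummary) {
  const supported =
    typeof PerformanceObserver !== "undefined" &&
    (PerformanceObserver.supportedEntryTypes || []).includes("long-animation-frame");
  if (!supported) return null;

  const observer = new PerformanceObserver((list) => {
    onSummary(summarizeLoafEntries(list.getEntries()));
  });
  observer.observe({ type: "long-animation-frame", buffered: true });
  return observer;
}
```

In a page, `observeLoaf((summary) => console.log(summary.scripts[0]))` would log the script that accumulated the most time in each batch of observed frames.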

NEW! Monitor Long Animation Frames and get to the bottom of your JavaScript issues

CPU consumption by the browser is one of the main causes – if not the number one cause – of a poor user experience. The primary culprit? JavaScript execution. Now you can use SpeedCurve to monitor Long Animation Frames (LoAFs) and fix the third parties and other scripts that are hurting your page speed.

Until recently, we've had little evidence from the field that definitively attributes the root cause of rendering delays. While JavaScript Long Tasks gave us a good indication that there were blocking tasks affecting metrics such as Interaction to Next Paint and Largest Contentful Paint, there was no way to attribute the work or understand how it was ultimately affecting rendering.

Fortunately, we've gotten a lot of help from Chrome in improving the attribution – and ultimately the actionability – of the data we collect in the field with RUM. The introduction of the Long Animation Frames API (LoAF) not only gives us better methods for understanding what's happening on the browser's main thread, in some cases it also gives us attribution to both first- and third-party scripts that occur during a LoAF.

This has been a highly anticipated addition to SpeedCurve, which is available for all our RUM users today. This post covers what's new in the product and points you to a few new resources to help you get up to speed on all things related to LoAF.

Our 10 most popular web performance articles of 2024

We love writing articles and blog posts that help folks solve real web performance and UX problems. Here are the ones you loved most in 2024. (The number one item may surprise you!)

Some of these articles come from our recently published Web Performance Guide – a collection of evergreen how-to resources (written by actual humans!) that will help you master website monitoring, analytics, and diagnostics. The rest come from this blog, where we tend to publish industry news and analysis.

Regardless of the source, we hope you find these pieces useful!

Five ways cookie consent managers hurt web performance (and how to fix them)

Cookie consent popups and banners are everywhere, and they're silently hurting the speed of your pages. Learn the most common problems – and their workarounds – with measuring performance with consent management platforms in place.

I've been spending a lot of time looking at the performance of European sites lately, and have found that the consent management platforms (CMPs) consistently create a false reality for folks when trying to understand performance using synthetic monitoring. Admittedly, this is not a new topic, but I feel it's important enough that it warrants another PSA.

In this post, I will cover some of the issues related to measuring performance with CMPs in place and provide some resources for scripting around consent popups in SpeedCurve.

UPDATE: Bookmark and compare synthetic tests

One of the huge benefits of tracking web performance over time is the ability to see trends and compare metrics. Last year we added new functionality that makes it easy for you to bookmark and compare different synthetic tests in your test history. We recently added some additional enhancements to make comparing tests even easier.

With the 'Compare' feature, you can generate side-by-side comparisons that let you not only spot regressions, but easily identify what caused them:

- Compare the same page at different points in time

- Compare two versions of the same page – for example, one with ads and one without

- Understand which metrics got better or worse

- Identify which common requests got bigger/smaller or slower/faster

- Spot any new or unique requests – such as JavaScript and images – and see their impact on performance

Along the way, we've also made it much more intuitive for you to drill down into your detailed synthetic test results. Let's take a look...

First Input Delay: How vital is it?

We’ve been pretty vocal about Core Web Vitals since Google announced this initiative last spring. We love the idea of having a lean, shared set of metrics that we can all rally around – not to mention having a broader conversation about web performance that includes teams throughout an organization.

For many site owners, the increased focus on Core Web Vitals is driven by the fact that Google will be including them as a factor in search ranking in May 2021. Other folks are more interested in distilling the extremely large barrel of performance metrics into an easily digested trinity of guidelines to follow in order to provide a delightful user experience.

We’ve had some time to evaluate and explore these metrics, and we're committed to transparently discussing their pros and cons.

The purpose of this post is to explore First Input Delay (FID). This metric is unique among the three Web Vitals in that it can only be measured using real user monitoring (RUM), while the other two (Largest Contentful Paint and Cumulative Layout Shift) can be measured using both RUM and synthetic monitoring.

In this post we'll cover:

- What is FID?

- What does FID look like across the web?

- The importance of measuring user interactions

- How JavaScript affects user behavior

- Suggestions for how you can look at FID in relation to your other key metrics

Let's dig in!

Are your JavaScript long tasks frustrating users?

When it comes to long JavaScript tasks, how long is too long?

The general consensus within the web performance community is that any JS scripting task that takes more than 50ms to execute can affect a user's experience. When the browser's main thread hits max CPU for more than 50ms, a user starts to notice that their clicks are delayed and that scrolling the page has become janky and unresponsive. Batteries drain faster. People rage click or go elsewhere.

No one plans to make a page or web app that sucks the life out of their users' devices, so it's super important to monitor the effect your JS is having. (Yes... I'm looking at you, front-end JS libraries and third-party ads!)

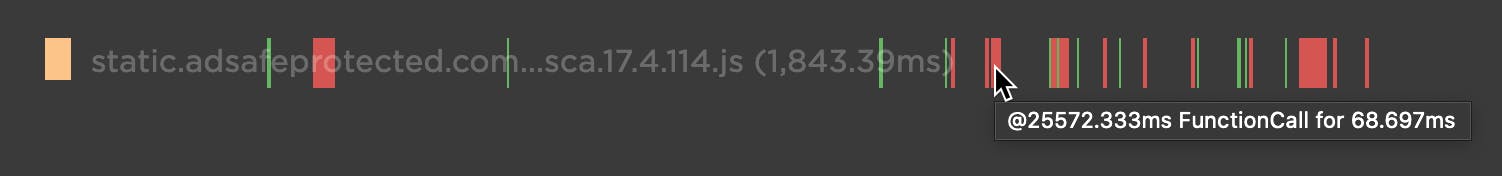

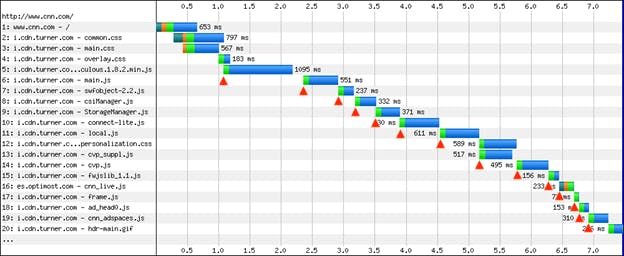

Our waterfall chart shows every long task over 50ms as a red block.

New JavaScript CPU metrics

We've recently added a bunch of new JavaScript CPU metrics that help you understand if your scripts are blocking the main thread and getting in the way of a super smooth experience for your users:

- Long Tasks – The total time of all your JS tasks over 50ms added together – from navigation start till the page is fully loaded.

- Number of Long Tasks – The total number of individual JS long tasks.

- Longest Task – There's a lot that can hide in the details of long task time. One really long task that frustrates your user is an order of magnitude worse than a bunch of long tasks that are just over the 50ms threshold each. For that reason, we track not only the number of long tasks in a page but also the duration of the longest task in the page. (We've seen individual JS long tasks taking over 1,000ms! That's a whole second where the browser just locked up, sat on its hands, and refused to do anything while a poor user mashed on the keyboard and wiggled the mouse wondering what happened.)
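To make the three metrics above concrete, here is a minimal sketch that derives them from a list of task durations. The function name `longTaskMetrics` and the input shape (an array of durations in milliseconds) are assumptions for illustration, not SpeedCurve's implementation.

```javascript
// A task counts as "long" when it runs for more than 50ms.
const LONG_TASK_THRESHOLD_MS = 50;

// Hypothetical helper: derive the three long task metrics from task durations.
function longTaskMetrics(taskDurationsMs) {
  const longTasks = taskDurationsMs.filter((d) => d > LONG_TASK_THRESHOLD_MS);
  return {
    // Long Tasks: total time of all tasks over the threshold.
    longTasksTotalMs: longTasks.reduce((sum, d) => sum + d, 0),
    // Number of Long Tasks.
    numberOfLongTasks: longTasks.length,
    // Longest Task: duration of the single worst task (0 if there are none).
    longestTaskMs: longTasks.length ? Math.max(...longTasks) : 0,
  };
}
```

Reporting the longest task separately matters because a total of 1,135ms could come from one 1,000ms lock-up plus two short tasks, or from many tasks just over the threshold, and those feel very different to a user.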

New! Tracking first- and third-party request groups

Getting visibility into the impact that known third parties have on the user experience has long been a focus in our community. There are some great tools out there – like 3rdParty.io from Nic Jansma and Request Map from Simon Hearne – which give us important insight into the complexity involved in tracking third-party content.

When we released our re-imagined Third Party Dashboard last year, we were excited to be providing site owners with another great tool for managing the unmanageable. Among other things, we took an approach that included:

- grouping requests,

- exposing Long Tasks attributed to third parties, and

- tracking blocking CPU time.

This provided even more insight into the different ways JavaScript could be causing real headaches for users.

We received a lot of feedback from our customers, who loved the new third-party functionality but REALLY wanted to see similar functionality for their "first party" content as well. We heard this message loud and clear, and today we're happy to announce a few changes to our Synthetic monitoring tool that address this need while preserving the functionality you already know and love.

Six web performance resolutions for the new year

For the past two years, the performance.now() conference has been the most valuable performance event I've attended. So valuable, in fact, that I've made some of the talks the cornerstone of this list of performance resolutions for 2020. I'd love to know how many – if any – of these are on your list. As always, I'd love people's feedback!

New! User Happiness metric, CI plugin, and an inspiring third-party success story

Here at SpeedCurve, the past few months have found us obsessing over how to define and measure user happiness. We've also been scrutinizing JS performance, particularly as it applies to third parties. And as always, we're constantly working to find ways to improve your experience with using our tools. See below for exciting updates on all these fronts.

As always, we love hearing from you, so please send your feedback and suggestions our way!

Getting started with web performance? Here's what you need to focus on.

A while back, our friends at Shopify published this great case study, showing how they optimized one of their newer themes from the ground up – and how they worked to keep it fast. Inspired by that post, I wanted to dig a bit deeper into a few of the best practices they mentioned, which fall loosely into these three buckets:

- Analyze your pages – understand the critical rendering path and page composition.

- Create performance budgets and fight regression.

- Build a performance culture that embraces collaboration between design and dev.

Keep reading to learn how you can apply these best practices to your own site and give your pages a speed boost.

Measuring Jank and UX

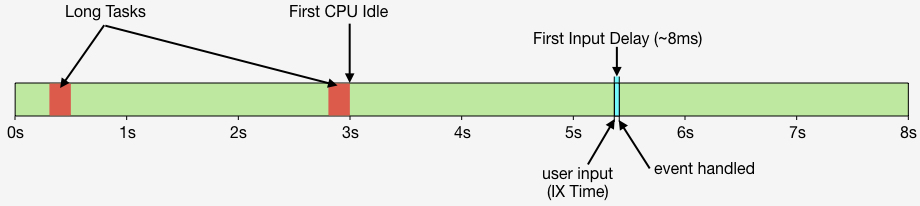

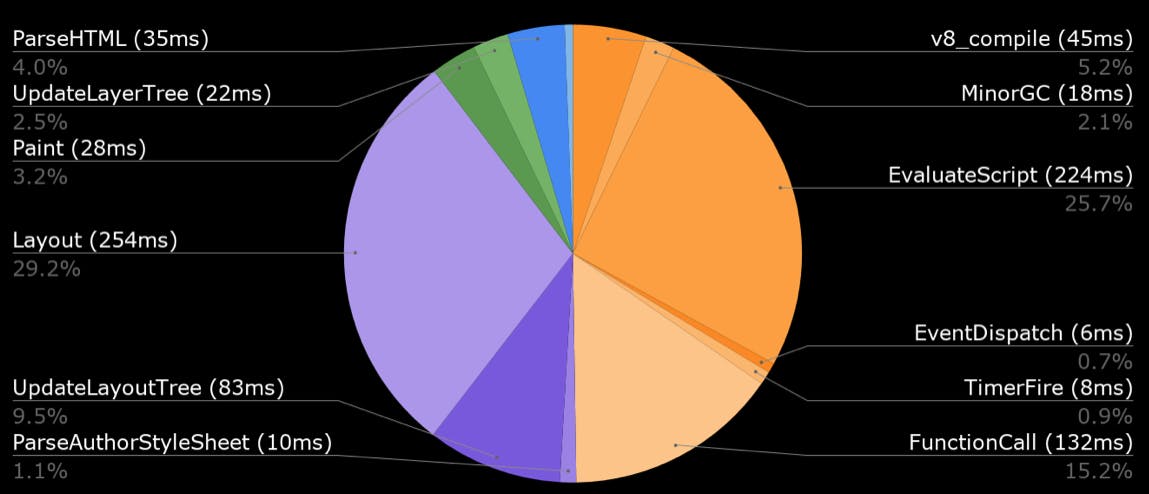

Ten years ago, the network was the biggest problem when it came to making websites fast. Today, CPU is the main concern. This happened because networks got faster while JavaScript moved in the other direction, growing 3x in size in the last six years. This growth is important because JavaScript consumes more CPU than all other browser activities combined. While JavaScript and other activities block the CPU, the browser can't respond to user input, creating the sensation of a slow, jittery, or broken page, AKA "jank".

To help focus our attention on CPU, several new performance metrics have been defined and evangelized over the last year or three. In this post I'm going to focus on these:

- First CPU Idle measures when the page is no longer janky. Specifically, it is the first span of 5 seconds where the browser main thread is never blocked for more than 50ms after First Contentful Paint. A value of 2-4 seconds is typical.

- First Input Delay measures the gap between when a user interacts with the page (e.g., clicks or scrolls) and when the browser is able to act on that input. First Input Delay values are much lower - a good target is 10ms, but 25ms is common.

- First Interaction Time is when the first user input takes place. This varies widely depending on the type of site and page. A good search results page might have a low First Interaction Time because users scroll and click quickly. A media site might have a high First Interaction Time because users start reading content (headlines, stories) before interacting with the page. At SpeedCurve we call this "IX Time".

- Total Long Task CPU Time is the sum of all long tasks that occur in the page. A "long task" is a browser event that blocks the main thread for more than 50ms.
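The First CPU Idle definition above can be sketched as a small search over long tasks. This is a simplified reading of that definition, not the exact algorithm any tool uses: the function name `firstCpuIdle` and the `{ start, duration }` task shape are assumptions, and the sketch assumes the main thread stays quiet after the last recorded task.

```javascript
// Hypothetical helper: find the start of the first quiet window after FCP.
// `longTasks` is an array of { start, duration } in milliseconds.
function firstCpuIdle(fcpMs, longTasks, quietWindowMs = 5000) {
  const tasks = longTasks
    .filter((t) => t.duration > 50) // only tasks that block for more than 50ms
    .map((t) => ({ start: t.start, end: t.start + t.duration }))
    .filter((t) => t.end > fcpMs) // tasks finished before FCP are irrelevant
    .sort((a, b) => a.start - b.start);

  // Start at FCP and push the candidate past every long task that
  // interrupts the quiet window beginning at the candidate.
  let candidate = fcpMs;
  for (const task of tasks) {
    if (task.start - candidate >= quietWindowMs) break; // window is clear
    candidate = Math.max(candidate, task.end);
  }
  // Assumption: no unrecorded long tasks occur after the last one listed.
  return candidate;
}
```

For example, with First Contentful Paint at 1,000ms and long tasks ending at 1,500ms and 2,100ms followed by nothing until 8,000ms, the first 5-second quiet window starts at 2,100ms.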

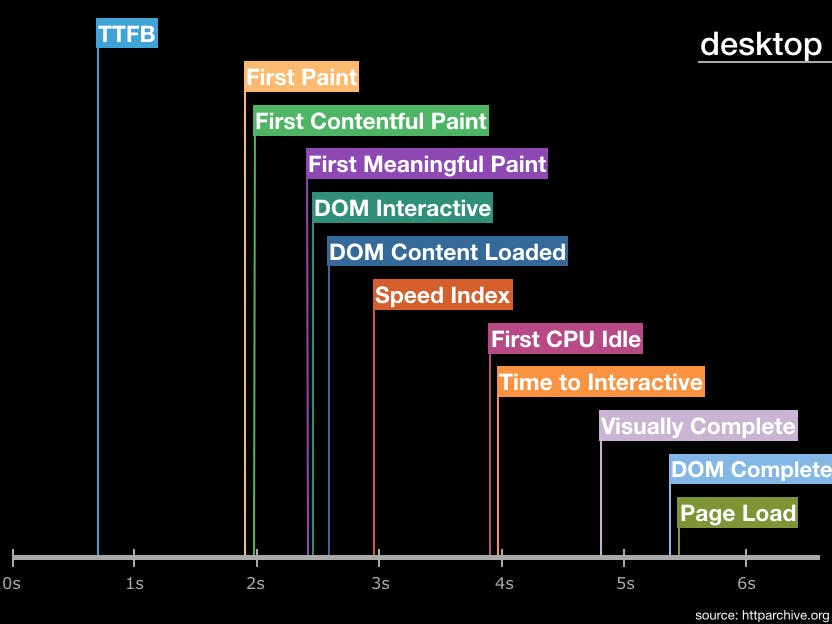

Here's a figure to help visualize these metrics.

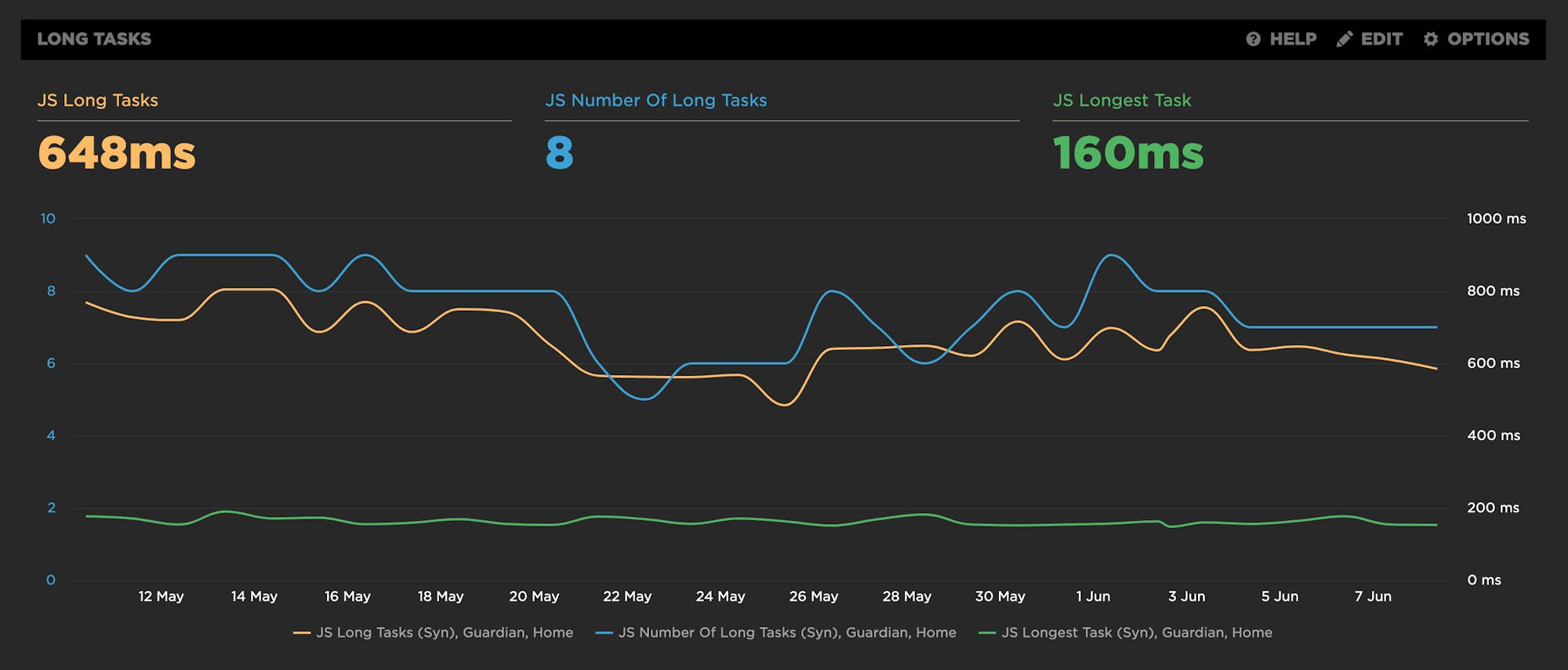

New LUX JavaScript Dashboards

As organizations work to improve performance for users around the world on slower networks and devices, the focus on JavaScript continues to grow. LUX's new JavaScript dashboards help to identify the problems and solutions for creating a fast, joyous user experience.

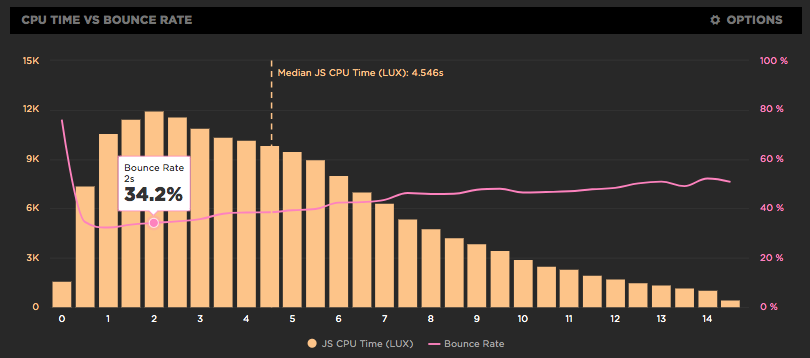

LUX is SpeedCurve's real user monitoring product. We launched it two years ago with four dashboards: Live, Users, Performance, and Design. Today we've added two more LUX dashboards: JavaScript and JS Errors. These new dashboards let you see the impact JavaScript has on your site and on your users with new metrics, including First CPU Idle and First Input Delay, and new features, such as correlation charts that show you how CPU time correlates with bounce rate.

JavaScript dominates browser CPU

Loading scripts asynchronously is critical for getting pages to render more quickly. We care about rendering because that's what users see; if rendering is slow, users have a negative experience. But it's not just about what users see - how the site feels is also important. That's why we focus so much on CPU time. If the CPU is blocked, then browsers are delayed in responding to user interactions like scrolling and clicking on links. In other words, the page feels janky. And what consumes the most CPU in browsers? You guessed it: JavaScript!

Preload scripts

In my previous post I talked about how loading scripts asynchronously reduces the impact of JavaScript resulting in a (much) faster user experience. But even when scripts are loaded async, the browser may still twiddle its thumbs for a second or more waiting for the first script to arrive. This delay can be decreased by using link rel=preload like this:

<link rel="preload" href="main.js" as="script">

Load scripts async

This blog post has a simple conclusion: Load scripts asynchronously! Simple, and yet the reality is that most scripts are still loaded synchronously. Understanding the importance of loading scripts asynchronously might help increase adoption of this critical performance improvement, so we're going to walk through the evolution of async script loading starting way back in 2007. Here's what loading 14 scripts looked like in Internet Explorer 7:

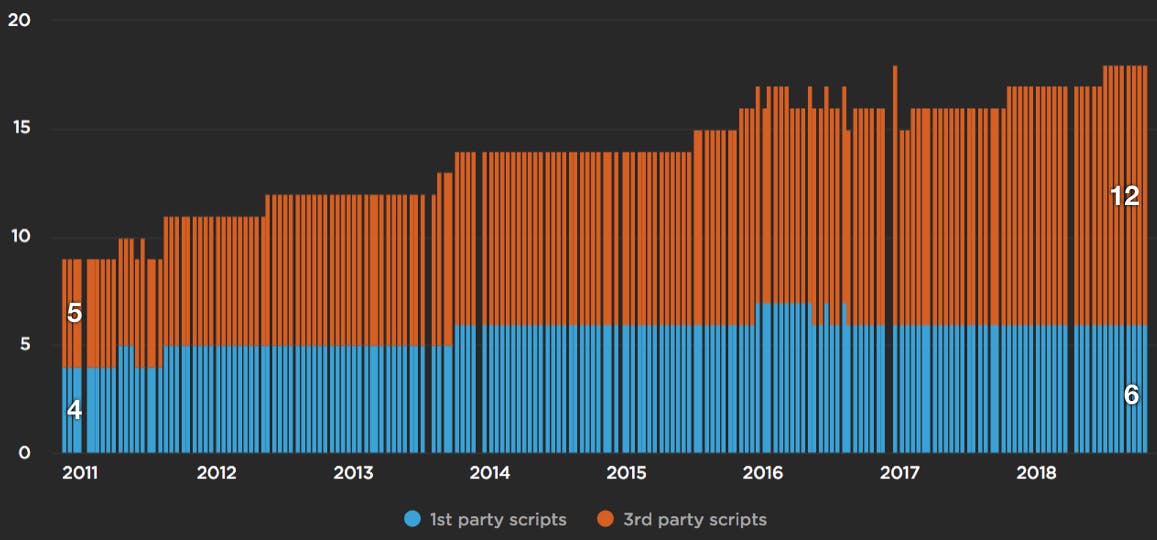

JavaScript growth and third parties

JavaScript is the main cause of slow websites. Ten years ago it was network bottlenecks, but the growth of JavaScript has outpaced network and CPU improvements on today's devices. In the chart below, based on an analysis from the HTTP Archive, we see the number of requests has increased for both first- and third-party JavaScript since 2011.

Metrics from 1M sites

The number of performance metrics is large and increases every year. It's important to understand what the different metrics represent and pick metrics that are important for your site. Our Evaluating rendering metrics post was a popular (and fun) way to compare and choose rendering metrics. Recently I created this timeline of performance metric medians from the HTTP Archive for the world's top ~1.3 million sites: