July 2022 product update: Web Vitals support, more synthetic test agents & performance heat maps

It's been another busy month here at SpeedCurve! Check out our latest product updates below.

June 2022 product update: Performance recommendations on Vitals dashboard, RUM path filters & more

We've been busy here at SpeedCurve HQ! Here's a roundup of our recent product updates.

Sampling RUM: A closer look

Being able to set a sample rate in your real user monitoring (RUM) tool allows you to monitor your pages while managing your spending. It's a great option if staying within a budget is important to you. With the ability to sample real user data comes this question:

"What should my RUM sample rate be?"

This frequently asked question doesn't have a simple answer. Refining your sample rate can be hit or miss if you aren't careful. In a previous post, I discussed a few things to consider when determining how much RUM data you really need to make informed decisions. If you sample too much, you may collect a lot of data you never use. On the other hand, if you sample too little, you risk introducing variability that makes your data hard to trust.
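One common way to apply a sample rate is to hash a session ID into a stable bucket from 0 to 99.99 and keep the session only if its bucket falls below the chosen percentage. The minimal TypeScript sketch below shows the idea; it is not how SpeedCurve implements sampling. The function names `fnv1a32` and `shouldSample`, the session ID format, and the choice of FNV-1a as the hash are all illustrative assumptions.

```typescript
// Hypothetical helper: 32-bit FNV-1a hash of a string, returned as an unsigned int.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    // Multiply by the FNV prime with 32-bit overflow, then force unsigned.
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Hypothetical helper: decide whether a session belongs in the RUM sample.
// sampleRatePercent is a percentage from 0 to 100.
function shouldSample(sessionId: string, sampleRatePercent: number): boolean {
  if (sampleRatePercent <= 0) return false;
  if (sampleRatePercent >= 100) return true;
  // Map the hash onto a stable bucket in [0, 100) with two decimal places.
  const bucket = (fnv1a32(sessionId) % 10000) / 100;
  return bucket < sampleRatePercent;
}
```

Hashing, rather than calling `Math.random()` on every page view, keeps all page views from one session consistently in or out of the sample. It also means that raising the rate from 10% to 50% only adds sessions; every session kept at 10% is still kept at 50%.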

Here, we're going to do a bit of research and let the data speak for itself. I looked at the impact of sampling at various levels for three t-shirt-sized companies (Small, Medium, Large), with the hope of providing some guidance for those of you considering sampling your RUM data.

In this post, I'll cover:

- Methodology

- Key findings

- Considerations

- Recommendations

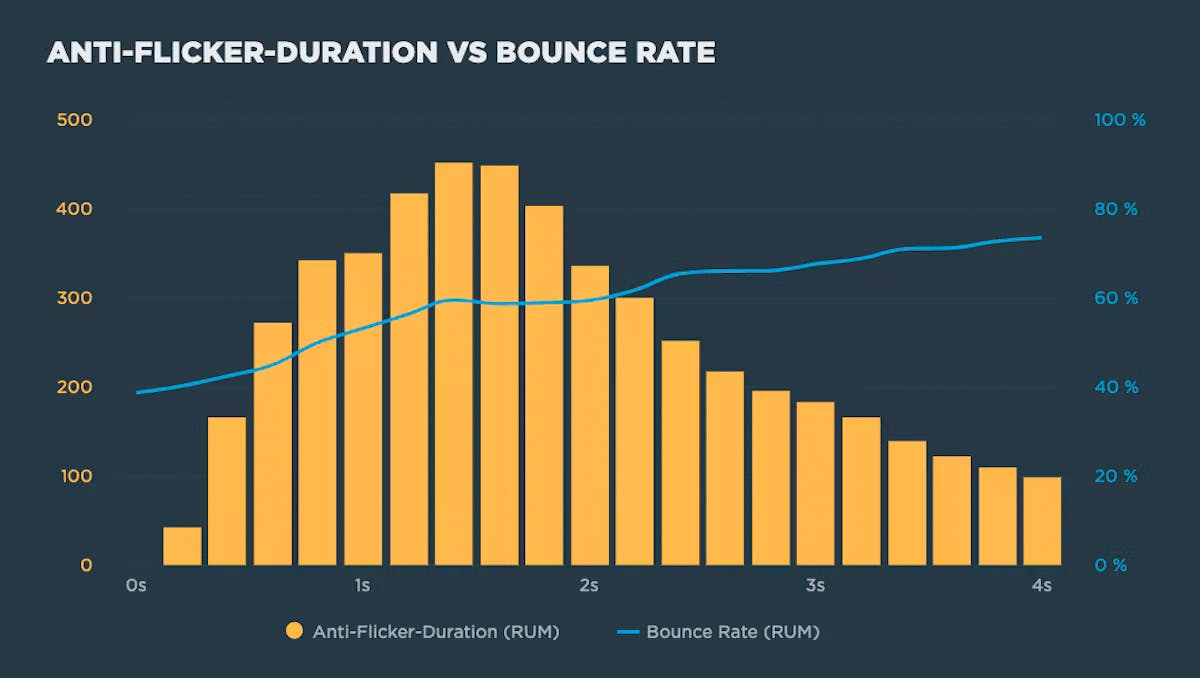

Understanding the performance impact of anti-flicker snippets

Experimentation tools that use asynchronous scripts – such as Google Optimize, Adobe Target, and Visual Website Optimizer – recommend using an anti-flicker snippet to hide the page until they've finished executing. But this practice comes with some performance measurement pitfalls:

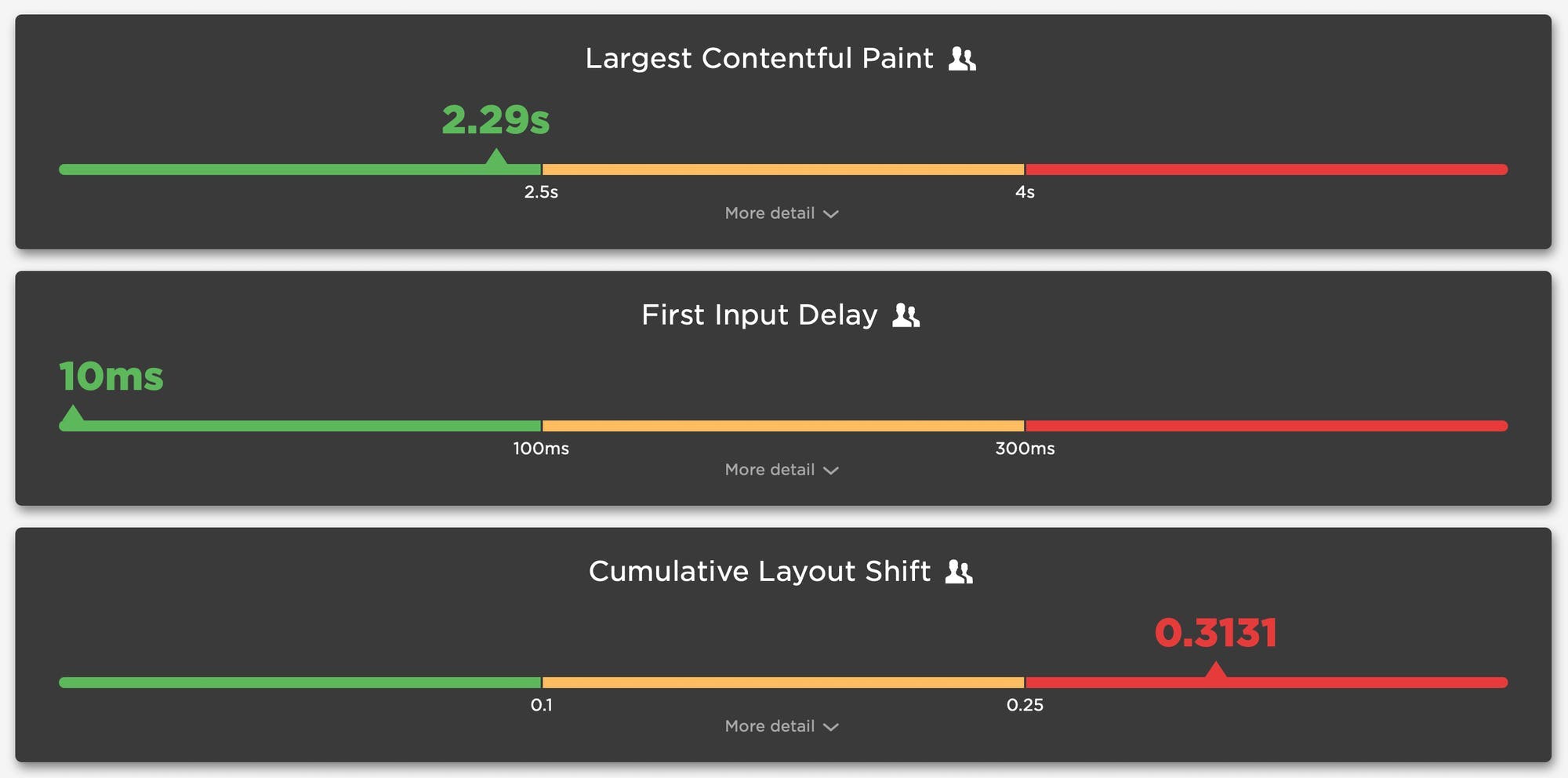

- Hiding the contents of the page can have a dramatic effect on the Web Vitals that measure visual experience, such as First Contentful Paint (FCP) and Largest Contentful Paint (LCP).

- Anti-flicker snippets can also affect Cumulative Layout Shift (CLS) and Total Blocking Time (TBT), the synthetic equivalent of First Input Delay (FID).

In this post we'll look at how anti-flicker snippets work, their impact on Web Vitals, and how to measure the delay they add to visitors' experience.

Industry page speed benchmarks (March 2022)

Page Speed Benchmarks is an interactive dashboard that lets you explore and compare web performance data for leading websites across several industries – from retail to media – over the past year. This dashboard is publicly available (meaning you don't need a SpeedCurve account to explore it) and is a treasure trove of meaningful data that you can use for your own research.

The dashboard allows you to easily filter by region, industry, mobile/desktop, fast/slow, and key web performance metrics, including Google's Core Web Vitals. (Scroll down to the bottom of this post for more testing details.)

At the time of writing this post, these were the home pages with the fastest Start Render times in key industries:

As you can see, I've included Largest Contentful Paint alongside Start Render in this chart, for reasons I explain below.

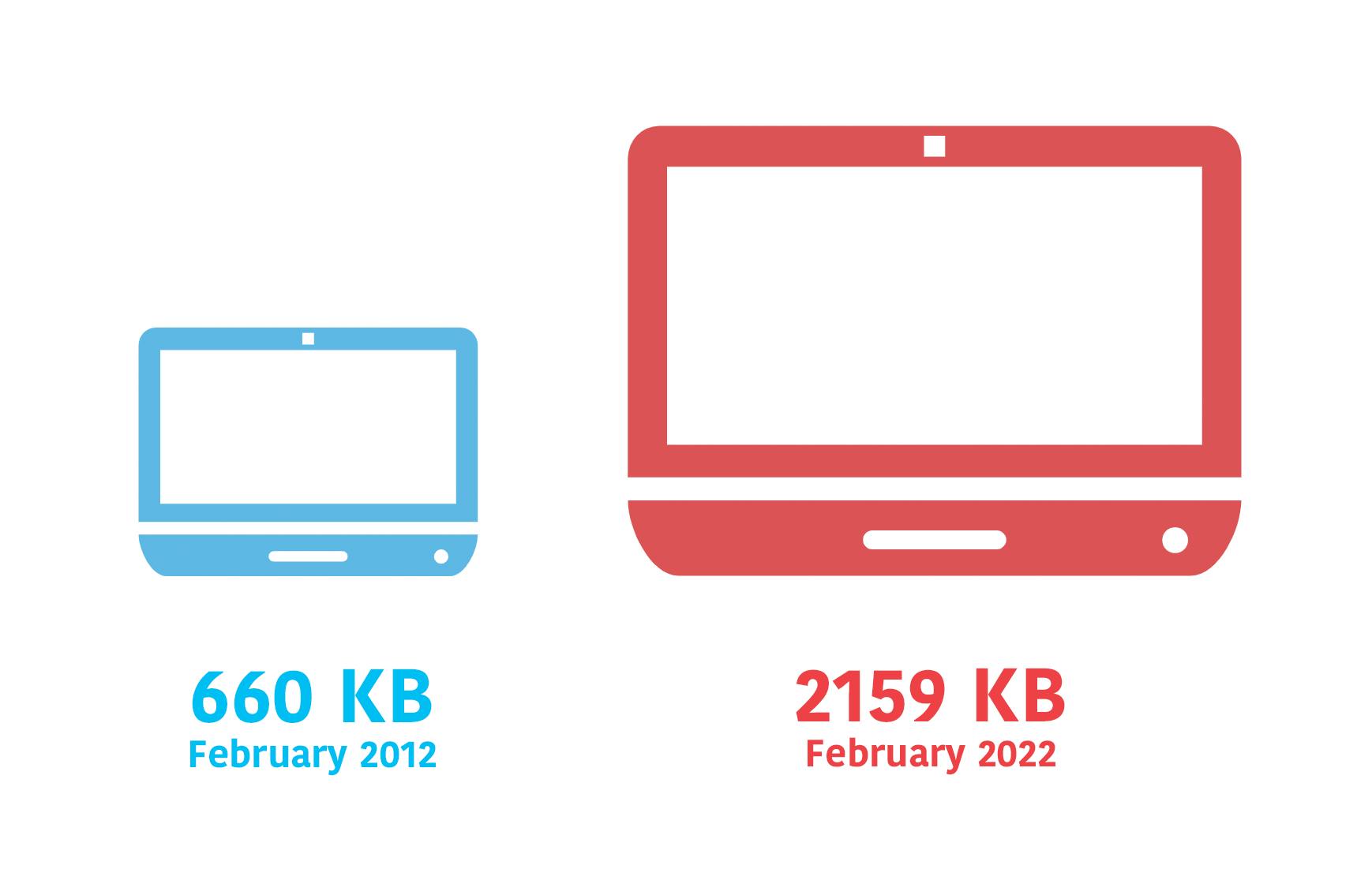

Ten years of page bloat: What have we learned?

(See our more recent page growth post: What is page bloat? And how is it hurting your business, search rank, and users?)

I've been writing about page size and complexity for years. If you've been working in the performance space for a while and you hear me start to talk about page growth, I'd forgive you if you started running away. ;)

But pages keep getting bigger and more complex year over year – and this increasing size and complexity is not fully mitigated by faster devices and networks, or by our hard-working browsers. Clearly, we need to keep talking about it. We need to understand how ever-growing pages work against us. And we need strategies in place to track and manage our pages.

In this post, we'll cover:

- How big are pages today versus ten years ago?

- How does page bloat hurt your business?

- How does page bloat affect other metrics, such as Google's Core Web Vitals?

- Is it possible to have large pages that deliver a good user experience?

- What can we do to manage our pages and fight regression?

NEW: RUM Live and Page Views dashboards

Shortly before the end of the year, we snuck in a couple of last-minute gifts for 2021. It was a great year for SpeedCurve, with a renewed focus on RUM. We couldn't think of a better way to finish out the year than to launch the new Live and Page Views dashboards. Let's take a look!

2021 in review: It was a big year!

This is the most important question we regularly ask ourselves here at SpeedCurve:

Does this bring happiness?

"This" can mean anything from design and features to our own internal processes. We apply this litmus test to everything we do, and I believe it's the secret of our success. It's why we have such an incredible team, such wonderful customers, and such a heartfelt commitment to building tools that make your life easier... and ultimately make your users' lives easier, too!

2021 has been a full year. We celebrated our eighth birthday – which I think makes us about eighty-eight in tech years? We've also grown our team, expanded our customer base, completed a full-scale site redesign, launched our in-house consulting practice, and refined our tools and processes. Here are some of the highlights...

SEO and web performance: What to measure and how to optimize

Chances are, you're here because of Google's update to its search algorithm, which affects both desktop and mobile, and which includes Core Web Vitals as a ranking factor. You may also be here because you've heard about the newest candidate metrics for Core Web Vitals, which were just announced at Chrome Dev Summit.

A few things are clear:

- Core Web Vitals, as a premise, are here to stay for a while.

- The metrics that comprise Web Vitals are still evolving.

- These metrics will (I think) always be in a state of evolution. That's a good thing. We need to do our best to stay up to date – not just with which metrics to track, but also with what they measure and why they're important.

If you're new to Core Web Vitals, they're part of a Google initiative that was launched in early 2020. Core Web Vitals are (currently) a set of three metrics – Largest Contentful Paint, First Input Delay, and Cumulative Layout Shift – that are intended to measure the loading, interactivity, and visual stability of a page.
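To make the three metrics concrete, here is a minimal TypeScript sketch that rates a value against the "good" and "poor" thresholds Google publishes for each Core Web Vital (LCP and FID in milliseconds, CLS unitless). Google recommends assessing a page at the 75th percentile of its field data, so the sketch also computes a p75. The function names are hypothetical, and the nearest-rank percentile method is an assumption; some tools interpolate between samples instead.

```typescript
type Metric = "LCP" | "FID" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

// [upper bound of "good", upper bound of "needs improvement"] per metric.
// LCP and FID are in milliseconds; CLS is a unitless score.
const THRESHOLDS: Record<Metric, [number, number]> = {
  LCP: [2500, 4000],
  FID: [100, 300],
  CLS: [0.1, 0.25],
};

// Hypothetical helper: rate a single value for a given metric.
function rate(metric: Metric, value: number): Rating {
  const [goodMax, needsImprovementMax] = THRESHOLDS[metric];
  if (value <= goodMax) return "good";
  if (value <= needsImprovementMax) return "needs-improvement";
  return "poor";
}

// Hypothetical helper: nearest-rank 75th percentile of RUM samples.
function p75(samples: number[]): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil(0.75 * sorted.length);
  return sorted[rank - 1];
}
```

For instance, FID samples of 50, 80, 120, and 400 ms have a nearest-rank p75 of 120 ms, so `rate("FID", p75([50, 80, 120, 400]))` returns `"needs-improvement"`.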

When Google talks, people listen. I talk with a lot of companies and I can attest that, since Web Vitals were announced, they've shot to the top of many people's list of things to care about. But Google's prioritization of page speed in search ranking isn't new, even for mobile. As far back as 2013, Google announced that pages that load slowly on mobile devices would be penalized in mobile search.

Keep reading to find out:

- How much does web performance matter when it comes to SEO?

- Which performance metrics should you focus on for SEO?

- What can you do to make your pages faster for SEO purposes?

- What are some of the common issues that can hurt your Web Vitals?

- How can you track performance for SEO?

New and improved Support Hub!

One of the things I love about SpeedCurve is our commitment to writing help documents that actually help. Every time we release a new feature, we make sure to give you an accompanying support doc – often written by the same team member who led the feature development. Luckily, we have great writers on our team, so our docs are exceptionally clear, concise, and easy to follow (if I do say so myself).

We just celebrated our eighth birthday – hooray! Eight years of building new features means eight years' worth of support docs. That's a lot of docs! Earlier this year, we realized that we had well over a hundred articles in our support centre. Inevitably, some duplication had crept in and some dead wood had accumulated. So we decided to give our docs a complete overhaul. That meant editing, organizing, purging – we gave them the full KonMari treatment.

Our brand-new Support Hub is live! Here's a quick overview of what you can expect to find, including new goodies like our "Web Performance 101" guides, as well as recipes for completing common tasks and our improved and expanded API documentation.